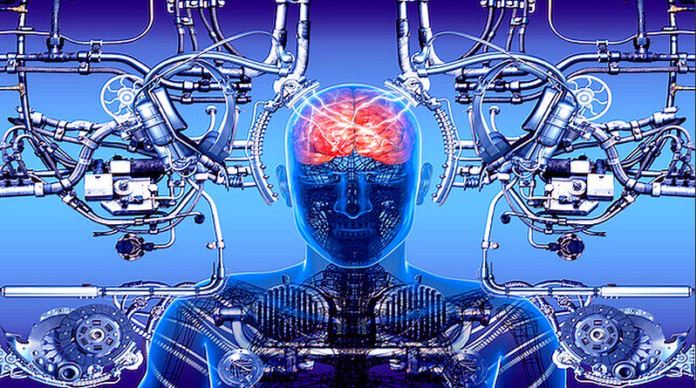

Movies like I, Robot, WALL-E and Bicentennial Man pose interesting questions that blur the lines between man and machine. They not only let our imaginations soar but also stir a hint of fear or uncertainty about whether our scientific pursuits are truly good when man tries to play God!

However, given the latest developments in the AI sector, it is safe to say that assassin robots, or a cyber-trap like the Matrix in which machines enslave their creators, remain a distant and very unlikely future.

Perhaps trying to translate these traits of thought and decision-making into our machine creations helps us understand our own humanness better! So, how far are we from having robots with morals?

Before we begin discussing how socializing an AI, or training it to have an almost human-like consciousness, can be helpful for science or for the greater good of humans, we must refer to this quote by Aristotle to understand our own disposition:

“All knowledge and each and every pursuit aim at some good”

However, he then conversely asks, “What then do people mean by ‘good’?”, further encapsulating the ethical dilemma. Since his time, this very question has been discussed and debated rigorously. After our long history of barbarism, war, famine and poverty, the human race has finally united in a collective interest in acquiring greater knowledge and wisdom. We have all agreed to work towards the good and the just. However, time has brought us to a crossroads where identifying what that entails is difficult.

We have studied the chemogeny and biogeny that led to the origin of life on Earth, but have never paid attention to the concept of cognogeny. Now that we are stepping into the “cognitive era”, with machines capable of thinking and making decisions just like humans, the question of what should be the guiding star for our actions is gaining newfound importance.

The burning question in the minds of scientists, engineers, and psychologists, along with the rest of the science-literate population, is:

“If it is still so difficult to ascribe a specific set of norms by which a person should abide in order to act justly and wisely, then how can we take the leap of encoding them into the machines with ‘artificial intelligence’ that we are making?”

And this question must be answered soon enough, if we are to take the next step in human evolution.

Morality vs. cultural norms

This is yet another issue that we must contend with: we must decide not only which ethical principles to encode into the machines we build to think like us, but also how to encode those ethics.

For the most part, “Thou shalt not kill” remains a cornerstone principle for coding intelligence into AI. But what about the rarer uses of AI, such as in the Secret Service, or a robot employed as a soldier or a bodyguard? After all, one man’s soldier is another man’s murderer! Thus, it is largely a matter of preference, which in turn depends on the context.

However, what is it that makes one principle a moral value and another a cultural norm? That remains a question even the most acclaimed ethicists have not been able to answer, yet roboticists still need to encode those decisions into their algorithms.

Some cases will call for strict principles, while in others there will merely be preferences based on context. For some tasks, algorithms will need to be coded slightly differently depending on the jurisdiction in which they operate.
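To make the distinction concrete, here is a minimal sketch in Python of how strict principles and context-dependent preferences might be kept apart, with per-jurisdiction adjustments layered on top. Everything in it is an assumption made for illustration: the action names (`harm_human`, `record_audio`), the jurisdictions (`region_a`, `region_b`) and the rules themselves are hypothetical and do not describe any real system or law.

```python
from dataclasses import dataclass
from enum import Enum


class Verdict(Enum):
    FORBIDDEN = "forbidden"      # violates a strict principle
    DISCOURAGED = "discouraged"  # allowed, but against a local preference
    ALLOWED = "allowed"


@dataclass(frozen=True)
class Policy:
    # Strict principles: never permitted, regardless of context.
    strict_prohibitions: frozenset = frozenset()
    # Preferences: permitted, but flagged as undesirable in this context.
    discouraged: frozenset = frozenset()


# Hypothetical base policy shared by every deployment.
BASE_POLICY = Policy(strict_prohibitions=frozenset({"harm_human"}))

# Hypothetical per-jurisdiction additions (invented names and rules).
JURISDICTION_OVERRIDES = {
    "region_a": Policy(discouraged=frozenset({"record_audio"})),
    "region_b": Policy(strict_prohibitions=frozenset({"record_audio"})),
}


def policy_for(jurisdiction: str) -> Policy:
    """Combine the base policy with any local additions."""
    extra = JURISDICTION_OVERRIDES.get(jurisdiction, Policy())
    return Policy(
        strict_prohibitions=BASE_POLICY.strict_prohibitions
        | extra.strict_prohibitions,
        discouraged=BASE_POLICY.discouraged | extra.discouraged,
    )


def evaluate(action: str, jurisdiction: str) -> Verdict:
    """Strict principles are checked first, then local preferences."""
    policy = policy_for(jurisdiction)
    if action in policy.strict_prohibitions:
        return Verdict.FORBIDDEN
    if action in policy.discouraged:
        return Verdict.DISCOURAGED
    return Verdict.ALLOWED
```

In this sketch the same action, `record_audio`, is merely discouraged in one hypothetical region and forbidden in another, while `harm_human` is forbidden everywhere. The hard part the article describes, deciding which actions belong in which set, is exactly what the code leaves open.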

The standards must be set higher

A lot of AI experts and industry insiders believe that we must set higher moral standards for our robots than we do for humans.

Tech industry giants such as Google, Amazon, IBM, and Facebook have recently set up a partnership to create an open platform between the leading AI companies and stakeholders in government, academia, and industry to advance the understanding and promotion of best practices. But that remains a mere starting point.

By: Vivek Debuka

Source: bigdata-madesimple.com