The rapid pace of technological advancement should be celebrated and embraced. It fuels amazing new products and scientific achievements that make us more connected and safer. It also pushes the limits of what we previously thought possible. The impact of these achievements is no longer isolated to a narrow market vertical. It permeates every industry and exposes established market incumbents to an unusual combination of disruption and growth potential.

But the pressure and the challenge to drive business impact are daunting in this climate. How do you stimulate growth while making large investments in future technologies without dramatically changing your business model? Companies are watching their operational costs balloon as they dip their toes into numerous areas of investment that require significant and often disparate expertise. Meanwhile, small startups with incredible focus and no prior obligations can leverage new technologies in ways that established competitors struggle to answer.

So how do you protect yourself from disruption? How do you innovate without radically increasing the cost of doing business? It all boils down to one simple question: Do you feel secure in the tools you’re using? That’s the magic question, whether it applies to your personal finances, your career, or the engineering systems of the future. For instance, the Industrial Internet of Things ushers in a new era of both networked potential and significant risk. To best understand which software prepares you to engineer future systems most securely, you should turn to the recent past.

In 2005, the previous three technological decades had been defined by one simple observation made by Intel cofounder Gordon Moore. Moore’s law was the prediction, based on the recent past, that the number of transistors on an integrated circuit would keep doubling at a regular interval, commonly quoted as every 18 months to two years. What looked like steady, linear progress was in fact exponential growth. Before we knew it, CEOs from every semiconductor manufacturer were talking about how many parallel processing cores they would ship over the next few years. Intel CEO Paul Otellini promised 80-core chips within five years. The demand for more processing power with lower latency marched on, and alternative processing fabrics emerged. First, the FPGA stormed into popularity with its user-defined hardware timing and massively complex low-level programming languages. Next came heterogeneous processing, which combined the traditional processor and the FPGA on a single chip.

Along with this explosion of processor architectures came a flood of new programming environments, programming languages, and open-source fads biding their time until their inevitable decline into oblivion. And, of course, the whole burden of figuring out how to program these processors efficiently fell on you.

But now, we look to the future. The explosion of processing capabilities is leading us into a world of hyperconnectivity, and this world becomes more connected as engineering systems become more distributed. Trends like 5G and the Industrial Internet of Things promise to connect infrastructure, transportation, and the consumer network to enrich the lives of people the world over. It’s inarguable that software will be the defining aspect of any engineering system, if it isn’t already. And it won’t be long before hardware becomes completely commoditized and the only distinguishing component of a system is the IP that defines its logic.

Most test and measurement vendors have been slow to respond to the inevitable rise of software and are just now hitting the market with software environments that help the engineering community. But even those can only get you so far. As the industry continues to evolve, the tools engineers use to design these connected systems must meet four key challenges: productivity through abstraction, software interoperability, comprehensive data analytics, and the efficient management of distributed systems.

Productivity Through Abstraction

Abstraction is one of those words that is so overused it’s in danger of losing its meaning. Simply put, it is making the complex common. In the world of designing engineering systems, complexity often comes from programming. The custom logic that adds the smart to smart systems typically requires coding so complex that it separates the pros from the amateurs. The complex must become common, though. To solve this challenge, engineers need a “programming optional” workflow that enables them to discover and configure measurement hardware, acquire real-world data, and then perform data analytics to turn that raw data into real insight. NI is introducing a new configuration-based workflow in the form of LabVIEW NXG. It is complemented by the graphical dataflow programming paradigm native to LabVIEW, which has been accelerating developer productivity in complex system design for nearly 30 years. With this configuration-based interaction style, you can progress from sensor connections all the way to the resulting action without programming, while the software still constructs the code modules behind the scenes. That last capability is critical because it streamlines the transition from one-off insights to repeatable, automated measurements.

Software Interoperability

With the growing complexity of today’s solutions, the need to combine multiple software languages, environments, and approaches is quickly becoming ubiquitous. However, the cost of integrating these software components is considerable and continues to increase. As specialized compute platforms are combined into single devices, the languages for those platforms must be integrated with one another. Typically, the design team assumes the burden of that integration. However, this only treats the symptoms and does not address the root cause, which the software vendors must fix.

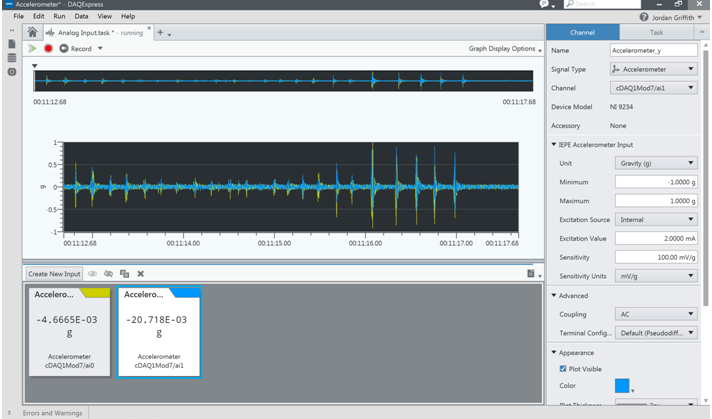

By design, NI’s software-centric platform places software interoperability at the forefront of the development process. Though LabVIEW has been at the center of this approach, many complementary software products in the platform are individually laser-focused on specific tasks, such as test sequencing, hardware-in-the-loop prototyping, server-based data analytics, circuit simulation for teaching engineers, and online asset monitoring. These products are purposefully limited to the common workflows of the engineers and technicians performing those tasks, a characteristic they share with other software in the industry tailored to the same purposes. What sets NI software apart is that LabVIEW provides ultimate extensibility through an engineering-focused programming language that overcomes the limitations of tailored software. For example, consider DAQExpress™.

DAQExpress is new companion software for USB and low-cost plug-in NI data acquisition hardware. It drastically simplifies the discovery and configuration of hardware and provides access to live data in two clicks. All the configuration “tasks” within this product are fully transferable to LabVIEW NXG, which simplifies the transition from hardware configuration to measurement automation.

In addition to interoperating within the NI platform, products like LabVIEW 2017 feature enhanced interoperability with IP and standard communications protocols. For embedded systems that need to interoperate with industrial automation devices, LabVIEW 2017 includes native support for IEC 61131-3, OPC-UA, and the secure DDS messaging standard. It also offers new interactive machine learning algorithms and native integration with Amazon Web Services.

Beyond the individual innovation within each of these products, the collection represents the fruit of NI’s ongoing investment in software. This unique combination of software products and their inherent interoperability separates NI’s platform from the rest. Other vendors are just now figuring out that software is the key, but NI’s investment in software has steadily increased over the last 30 years.

Comprehensive Data Analytics

Perhaps the most significant benefit of the mass connectedness between the world’s systems is the ability to access data instantaneously and analyze every data point you collect. This process is critical to automating decision-making and eliminating preventable delays in corrective action when data anomalies occur. To create a future network that can support this need, billions of dollars are being poured into research as algorithm experts around the globe race to meet the demands of 1 ms latency coupled with 10 Gbps throughput. This direction places two new demands on software. The first is to ensure that processing elements can be easily deployed across a wide variety of processing architectures and then redeployed on a different processor with minimal (ideally zero) rework. The second is to be open enough to interface with data from a virtually unlimited number of nodes, in a virtually unlimited number of data formats.

NI has invested in server products that allow you to intelligently and easily standardize, analyze, and report on large amounts of data across your entire test organization. A key component is providing algorithms to preprocess files and automatically standardize items such as metadata, units, and file types in addition to performing basic analysis and data quality checks. Based on that data’s contents, the software can then intelligently choose which script gets run. This type of interface is critical to eliminating the complexity of real-time data analytics so you can focus on what matters: the data.
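As a rough illustration of the standardize, check, then dispatch flow described above, here is a minimal Python sketch. It is not NI’s server software or its API; the `Record` type, the metadata alias and unit tables, and the two report functions are hypothetical stand-ins, and the sketch assumes only linear unit conversions (a scale factor, no offsets).

```python
from __future__ import annotations

import math
from dataclasses import dataclass
from statistics import mean
from typing import Callable


@dataclass
class Record:
    """One incoming measurement file, reduced to metadata, a unit, and samples."""
    metadata: dict[str, str]
    unit: str
    values: list[float]


# Hypothetical: site- or vendor-specific metadata keys mapped to one canonical name.
METADATA_ALIASES = {"sn": "serial_number", "serial": "serial_number", "op": "operator"}

# Hypothetical: source unit -> (canonical unit, scale factor). Linear conversions only.
UNIT_CANON = {"mV": ("V", 1e-3), "V": ("V", 1.0), "kPa": ("Pa", 1e3), "Pa": ("Pa", 1.0)}


def standardize(raw: Record) -> Record:
    """Normalize metadata keys and convert samples to the canonical unit.

    Assumes raw.unit is a key of UNIT_CANON (process() checks this first).
    """
    meta = {METADATA_ALIASES.get(k.lower(), k.lower()): v for k, v in raw.metadata.items()}
    unit, scale = UNIT_CANON[raw.unit]
    return Record(meta, unit, [x * scale for x in raw.values])


def quality_problems(rec: Record) -> list[str]:
    """Basic data-quality checks; an empty list means the record passed."""
    problems = []
    if "serial_number" not in rec.metadata:
        problems.append("missing serial_number")
    if not rec.values:
        problems.append("no samples")
    elif any(math.isnan(x) for x in rec.values):
        problems.append("NaN in samples")
    return problems


def voltage_report(rec: Record) -> str:
    return f"voltage: mean {mean(rec.values):.3f} V over {len(rec.values)} samples"


def pressure_report(rec: Record) -> str:
    return f"pressure: peak {max(rec.values):.1f} Pa over {len(rec.values)} samples"


# The content of the standardized record (here, its canonical unit) picks the script.
SCRIPTS: dict[str, Callable[[Record], str]] = {"V": voltage_report, "Pa": pressure_report}


def process(raw: Record) -> str:
    """Standardize, quality-check, then run the analysis script chosen by content."""
    if raw.unit not in UNIT_CANON:
        return f"rejected: unknown unit {raw.unit!r}"
    rec = standardize(raw)
    problems = quality_problems(rec)
    if problems:
        return "rejected: " + ", ".join(problems)
    return SCRIPTS[rec.unit](rec)
```

For example, `process(Record({"SN": "A17", "Op": "kim"}, "mV", [1200.0, 1300.0]))` normalizes `SN` to `serial_number`, converts the samples to volts, and routes the record to the voltage report. Dispatching on the standardized record rather than on the raw file is the point of the design: once units and metadata are normalized, one small table of scripts can serve every source format.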

Distributed Systems Management

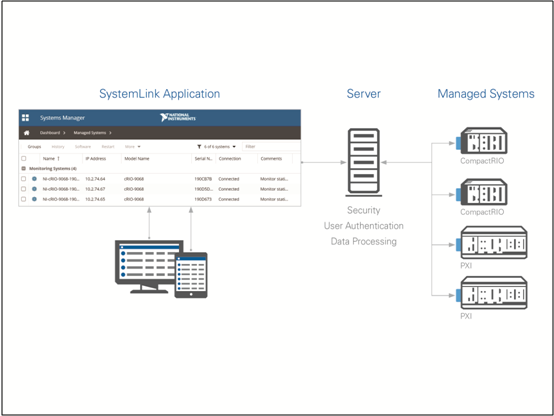

The mass deployment and connectedness of these systems have renewed the need to efficiently manage all the distributed hardware from a centralized, and often remote, location. Today, this typically requires replicating single-point deployments across hundreds, and even thousands, of systems. Centralizing that management also makes it possible to monitor the hardware on a real-time dashboard from a remote depot instead of physically accessing each system.

SystemLink is innovative new software from NI that helps you centralize the coordination of a system’s device configuration, software deployment, and data management. This reduces the administrative burden and logistical costs associated with systems management functions. The software also improves test and embedded system uptime by promoting awareness of operational state and health criteria. It simplifies managing distributed systems and provides APIs for LabVIEW and other programming languages, such as C++.
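The idea of judging a fleet against operational state and health criteria can be sketched in a few lines of Python. This is not SystemLink’s API, which the text does not describe. The `Heartbeat` message, the thresholds, and the `evaluate` and `dashboard` helpers are all hypothetical, and the sketch assumes each deployed system periodically pushes a status report to a central server.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class State(Enum):
    OK = "ok"
    WARNING = "warning"
    NEEDS_UPDATE = "needs update"
    OFFLINE = "offline"


@dataclass(frozen=True)
class Heartbeat:
    """Latest status report pushed by one deployed system (hypothetical format)."""
    system_id: str
    timestamp_s: float  # assumes system clocks are synchronized with the server
    cpu_percent: float
    disk_free_percent: float
    software_version: str


# Hypothetical health criteria; a real deployment would make these configurable.
MAX_SILENCE_S = 60.0
CPU_WARN_PERCENT = 90.0
DISK_FREE_WARN_PERCENT = 10.0


def evaluate(hb: Heartbeat | None, now_s: float, expected_version: str) -> State:
    """Classify one system from its most recent heartbeat, if any."""
    # Staleness is checked first so a silent system never looks healthy.
    if hb is None or now_s - hb.timestamp_s > MAX_SILENCE_S:
        return State.OFFLINE
    # Software drift: the system is not running the version that was deployed.
    if hb.software_version != expected_version:
        return State.NEEDS_UPDATE
    if hb.cpu_percent > CPU_WARN_PERCENT or hb.disk_free_percent < DISK_FREE_WARN_PERCENT:
        return State.WARNING
    return State.OK


def dashboard(
    fleet: list[str], latest: dict[str, Heartbeat], now_s: float, expected_version: str
) -> dict[State, list[str]]:
    """Group every system in the fleet by state, for a single-page overview."""
    summary: dict[State, list[str]] = {state: [] for state in State}
    for system_id in fleet:
        summary[evaluate(latest.get(system_id), now_s, expected_version)].append(system_id)
    return summary
```

Given a fleet of four rigs where one is overloaded, one runs an old software version, and one has never reported, `dashboard` places each rig in its own state bucket. An operator can then act on the exceptions without visiting any rig in person.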

Ask Yourself Again

Each of these product releases reflects the same long-term commitment to software that NI has demonstrated year after year, and their combined interoperability is what sets NI’s platform apart. From helping discover the Higgs boson particle to decreasing test times by 100x for Qualcomm to being Nokia’s and Samsung’s solution of choice for their 5G research, NI’s software-centric platform is the building block that engineers use to solve the most complex challenges in the world.

Ask yourself again: How secure do you feel in the tools you’re using?