Artificial intelligence (AI) has moved from hype to headline, impacting everything from health diagnostics to financial analysis. While the public marvels at AI breakthroughs, the engines powering these advances, the world’s data centers, face mounting challenges behind the scenes. As organizations expand their AI capabilities, the energy needed to support modern computing infrastructure is rising at an unprecedented rate.

Current research projects that global data center power demand will increase by 50% as early as 2027 and by 165% by 2030, with much of the surge attributed to the explosive growth of AI workloads. Data centers already account for approximately 2% of worldwide electricity consumption, and forecasts suggest this share will keep climbing. The resulting strain extends beyond server rooms: it is already reshaping energy supply chains, policy priorities, and environmental strategy across industries.

Rising to the infrastructure challenge

Serving next-generation, AI-driven applications requires a dramatic rethink of traditional data center design. Historically, a data center’s infrastructure balanced a mix of physical and virtual resources — servers, storage, networking, power distribution units, cooling systems, security protocols, and supporting elements like racks and fire suppression — all engineered for reliability and uptime.

AI’s energy-hungry, compute-intensive tasks have shattered these historical balances. Data centers today must deliver far more power to denser racks, operate reliably under heavier loads, and deploy new capacity at speeds unimaginable even a decade ago. These requirements are putting immense pressure on every inch of physical infrastructure, from the electrical grid connection to the server cabinet.

Navigating power and cooling demands

One of the most acute challenges arises from escalating power and cooling needs. Where historical rack architectures required 16 or 32 A, current designs push 70, 100, or even 200 A, often within the same physical rack space. These steep increases not only generate more heat but also require thicker, less flexible power cabling, creating new problems for deployment and ongoing maintenance.
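Why do higher currents translate so directly into heat? Resistive (Joule) loss in a cable run or connection point grows with the square of the current. The worked comparison below is an illustrative sketch only. It assumes the same conductor and contact resistance $R$ and the same supply voltage $V$ in both cases, which is precisely what thicker cabling and low-resistance connectors are meant to change in practice.

```latex
% Resistive loss in a conductor or contact of resistance R carrying current I
P_{\text{loss}} = I^{2} R

% Assumption: R held fixed, comparing a 200 A rack feed with a 32 A feed
\frac{P_{\text{loss}}(200\,\text{A})}{P_{\text{loss}}(32\,\text{A})}
  = \left(\frac{200}{32}\right)^{2} = 6.25^{2} \approx 39

% Assumption: V held fixed; delivered power P = VI grows only linearly,
% so the fraction of power lost grows in proportion to I
\frac{P_{\text{loss}}}{P_{\text{delivered}}} = \frac{I^{2} R}{V I} = \frac{I R}{V}
```

Under these assumptions, a 6.25-fold increase in current delivers 6.25 times the power but dissipates roughly 39 times the heat at each resistive point, so the share of energy lost rises 6.25-fold unless resistance is driven down. This is why higher-amperage designs lean on larger conductors and connectors with low contact resistance.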

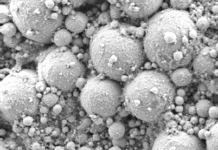

Efficiently removing heat from ever-denser configurations is a major engineering feat. Next-generation cooling technologies, ranging from liquid cooling to full-system immersion, are becoming essential rather than optional. At the same time, every connection point and cable run becomes a potential source of inefficiency or risk. Operators can no longer afford the energy loss, excess heat, or downtime caused by outdated power distribution or poorly optimized layouts.

The space and scalability constraint

AI workloads are increasingly mission-critical. Even brief interruptions can cause significant financial losses and disrupt the users and services that depend on them. With power loads climbing fast and every square foot optimized, the need for trustworthy, quickly serviceable infrastructure grows more urgent. Reliable operation is now a defining competitive factor for data center operators.

To complicate matters further, capacity needs are accelerating while available space remains finite. In many regions, the cost and scarcity of real estate force data centers to pack as much compute and power as possible into smaller footprints. As higher-power architectures proliferate, the infrastructure supporting them, from power to networking, must become more compact and adaptable while remaining robust, maintainable, and safe.

Because new workloads can spike unpredictably, data center leaders now need infrastructure that can be scaled up, upgraded, or reconfigured rapidly, sometimes within days rather than months. The traditional model of labor-intensive rewiring is proving unsustainable under these conditions.

Sustainability in the spotlight

Environmental scrutiny from regulators, investors, and end users places data centers at the heart of the global decarbonization agenda. Facilities must now integrate renewable energy, maximize electrical efficiency, and minimize their overall carbon footprint, all while delivering more power each year. Achieving these goals demands holistic change, from energy procurement and grid strategy down to every connector, cable, and cooling loop inside the facility.

The challenges of the AI era are being met with new ideas at every level of the data center. Smarter building management systems now orchestrate lighting, thermal control, and energy use with unprecedented efficiency; cooling technologies are evolving quickly as operators push beyond the limits of traditional air-based systems; and advanced power distribution and grid connectivity solutions are enabling better load balancing, more reliable energy supply, and easier renewable integration.

Within this broad transformation, the move toward modular, plug-and-play connections, sometimes called connectorization, is having a dramatic impact. Hardwired installations are slow to deploy, often hard to scale and maintain, and dependent on specialized labor that is frequently unavailable. Connectorized infrastructure, by contrast, uses pre-assembled, pre-tested units that can be installed in days rather than weeks by the workforce already on site. This not only brings new capacity online faster but also reduces the opportunity for error, simplifies expansions, and supports higher power throughput within constrained spaces.

Connectors designed for today’s and tomorrow’s power demands minimize heat and energy loss, enhance reliability, and simplify upgrades. Maintenance becomes easier and faster, requiring less specialized expertise and causing less operational downtime. These modular technologies also help data centers optimize their architecture, manage complex workloads, and future-proof their operations.

Cooperation and adaptation in a complex landscape

Modernizing data center infrastructure is not merely a technical challenge; it requires broad collaboration among technology vendors, utilities, cloud providers, regulators, and policymakers. Federal incentives, innovative funding, and public-private partnerships are all supporting grid modernization efforts, while flexibility in design and operation allows data centers to adapt to regional differences in energy supply, regulation, and demand.

While AI has redefined what is possible, it has also redefined what is required behind the scenes. Data center infrastructure must evolve rapidly, becoming not only larger but also smarter, faster, and greener. Every connection system and square foot now counts in the race to keep up with exponential demand.