Test and Measurement for Post-Quantum Secure Systems

The anticipated explosion in quantum computing capabilities is poised to redefine the landscape of digital security. As quantum processors edge closer to practical realization, the cryptographic foundations that secure today’s communications, financial systems, and digital identities face an existential threat. This looming milestone, often referred to as Q-Day, marks the point at which quantum computers running Shor's algorithm will be capable of breaking widely used public-key cryptosystems such as RSA and Elliptic Curve Cryptography (ECC), rendering much of the world’s encrypted data vulnerable to decryption. Because encrypted data harvested today could be decrypted once such machines exist, the risk is already present, a threat known as “harvest now, decrypt later.” In response, governments, standards bodies, and enterprises are accelerating efforts to adopt quantum-safe technologies that can withstand attacks by quantum computers.

Quantum-safe networks

Quantum-safe technologies must be not only secure but also interoperable, resilient, migratable, and able to perform in real-world environments. This is especially critical at the optical layer, where quantum signals are transmitted and received, as well as at the post-quantum cryptographic layer where keys are established. The challenge is to move quantum technologies, particularly quantum optics, out of the lab and into the field, transforming them from scientific experiments into operational systems.

Digital encryption is a crucial component of any over-the-air communication system, such as mobile telephony. Today's public-key encryption methods are at risk of being broken as quantum computers mature. One solution is to adopt post-quantum cryptography (PQC), which uses algorithms designed to resist quantum attacks, to safeguard sensitive data. The mobile standards body 3GPP is evolving its standards to incorporate PQC algorithms to defend against future quantum computer attacks, relying on other standards bodies such as the National Institute of Standards and Technology (NIST) for the standardized algorithms themselves. Concern about the attacks quantum computers may enable has also prompted the GSMA (Global System for Mobile Communications Association) to establish the Post-Quantum Telco Network Task Force (PQTN) to advise mobile operators on how to transition to post-quantum readiness.

Standards

Standardization efforts in quantum key distribution (QKD) are well underway, driven by the need to ensure interoperability, security assurance, and global adoption of quantum-safe communication systems. Organizations such as the European Telecommunications Standards Institute (ETSI), the ITU Telecommunication Standardization Sector (ITU-T), and ISO/IEC have led the charge in defining frameworks, protocols, and security requirements for QKD systems.

NIST is leading the definition of PQC algorithms, while industry alliances with wide-scale backing are also forming; the Post-Quantum Cryptography Alliance (PQCA) and GSMA's Post-Quantum Telco Network Task Force (PQTN) are notable examples. GSMA also provides guidelines for PQC and hybrid deployment scenarios in the telecom industry.

NIST algorithms

NIST has standardized three algorithms, each serving a different function. FIPS 203 (ML-KEM) and FIPS 204 (ML-DSA) use lattice-based techniques, respectively to establish a shared secret key and to prove a message’s origin and authenticity through digital signatures. FIPS 205 (SLH-DSA) uses hash-based cryptography to provide an alternative to FIPS 204, albeit a slower one with larger signatures.
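The size trade-off between these schemes can be made concrete. The sketch below tabulates public-key and ciphertext/signature sizes for a selection of the standardized parameter sets, as we read them from FIPS 203, 204 and 205, and compares them with X25519 and Ed25519. The choice of classical baselines and the "bytes a peer must receive" metric are our own simplifications; certificates, encodings and protocol framing add further overhead.

```python
from dataclasses import dataclass

# Sizes in bytes from FIPS 203 (ML-KEM), FIPS 204 (ML-DSA) and FIPS 205 (SLH-DSA).
# "output" is the ciphertext for a KEM and the signature for a signature scheme.


@dataclass(frozen=True)
class ParamSet:
    name: str
    kind: str        # "kem" or "sig"
    public_key: int  # bytes
    output: int      # bytes


PARAM_SETS = [
    ParamSet("ML-KEM-512", "kem", 800, 768),
    ParamSet("ML-KEM-768", "kem", 1184, 1088),
    ParamSet("ML-KEM-1024", "kem", 1568, 1568),
    ParamSet("ML-DSA-44", "sig", 1312, 2420),
    ParamSet("ML-DSA-65", "sig", 1952, 3309),
    ParamSet("ML-DSA-87", "sig", 2592, 4627),
    ParamSet("SLH-DSA-SHA2-128s", "sig", 32, 7856),
    ParamSet("SLH-DSA-SHA2-128f", "sig", 32, 17088),
]

# Classical baselines (our assumption): X25519 exchanges two 32-byte public
# values; Ed25519 has a 32-byte public key and a 64-byte signature.
CLASSICAL_BYTES = {"kem": 32 + 32, "sig": 32 + 64}


def wire_bytes(p: ParamSet) -> int:
    """Bytes a peer must receive: public key plus ciphertext or signature."""
    return p.public_key + p.output


def overhead_factor(p: ParamSet) -> float:
    """How many times larger than the classical baseline of the same kind."""
    return wire_bytes(p) / CLASSICAL_BYTES[p.kind]


if __name__ == "__main__":
    for p in PARAM_SETS:
        print(f"{p.name:<20} {wire_bytes(p):>6} B  x{overhead_factor(p):.1f}")
```

For example, ML-KEM-768 moves 2,272 bytes where X25519 moves 64, which is the kind of growth that stresses MTUs, handshakes and constrained links.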

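Adoption of quantum-resistant algorithms is being mandated by the NSA, through its Commercial National Security Algorithm Suite 2.0 (CNSA 2.0), and by other national security bodies. Under CNSA 2.0, some categories of national security systems, such as traditional networking equipment, must use quantum-resistant algorithms exclusively by 2030, with the full transition targeted for 2035. More broadly, the move to quantum safety can take the form of software-implemented algorithms (post-quantum cryptography, PQC), hardware-based key exchange methods that use optical links (quantum key distribution, QKD), or a hybrid approach for transitional architectures.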

Validating compliance

Implementing these algorithms is not enough. The algorithms themselves have undergone years of public cryptanalysis, but each implementation still needs to be tested to verify its correctness and compliance. Because deployments may use QKD, PQC, or a hybrid of the two, there is no one-size-fits-all approach to testing.
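A basic building block of implementation testing is the known-answer test (KAT): feed fixed inputs to the implementation under test and compare its outputs with published reference values, as validation programs such as NIST's Cryptographic Algorithm Validation Program do. The minimal harness below illustrates the pattern with SHA3-256 (FIPS 202), a primitive used inside ML-KEM and ML-DSA, because its reference vectors are short. The `run_kats` helper and the deliberately faulty implementation are hypothetical; real ML-KEM or ML-DSA vectors would come from the official validation test data.

```python
import hashlib
from typing import Callable, List, Tuple

# Published SHA3-256 (FIPS 202) reference digests for two short messages.
KAT_VECTORS: List[Tuple[bytes, str]] = [
    (b"", "a7ffc6f8bf1ed76651c14756a061d662f580ff4de43b49fa82d80a4b80f8434a"),
    (b"abc", "3a985da74fe225b2045c172d6bd390bd855f086e3e9d525b46bfe24511431532"),
]


def run_kats(impl: Callable[[bytes], bytes], vectors) -> list:
    """Return (message, expected_hex, actual_hex) for every failing vector."""
    failures = []
    for message, expected_hex in vectors:
        actual_hex = impl(message).hex()
        if actual_hex != expected_hex:
            failures.append((message, expected_hex, actual_hex))
    return failures


def reference_impl(message: bytes) -> bytes:
    return hashlib.sha3_256(message).digest()


def faulty_impl(message: bytes) -> bytes:
    # Hypothetical bug: the wrong hash family (SHA-256 instead of SHA3-256).
    return hashlib.sha256(message).digest()


if __name__ == "__main__":
    print("reference failures:", len(run_kats(reference_impl, KAT_VECTORS)))
    print("faulty failures:", len(run_kats(faulty_impl, KAT_VECTORS)))
```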

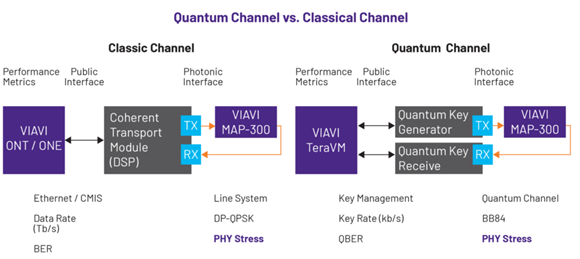

For QKD systems, we need to measure the quantum bit error rate (QBER) of the quantum channel being validated; a high QBER can indicate eavesdropping or channel degradation. Physical stress testing should also be undertaken to test the resiliency of QKD systems and their ability to self-regulate, using an emulated network and a range of controlled optical stresses to verify that key generation continues. Such testing must also extend to the service layer to ensure the key management system can reliably deliver keys to applications.
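The QBER itself is simple arithmetic: the fraction of positions where the two parties' sifted bits disagree, estimated on a sample that is then disclosed and discarded. The sketch below computes it and, assuming BB84 with one-way post-processing in the asymptotic limit, the Shor-Preskill secret-key fraction 1 - 2h(Q), which falls to zero at roughly 11% QBER. Real systems use finite-key analyses and vendor-specific abort thresholds, so the 0.11 constant here is illustrative only.

```python
import math
from typing import Sequence

# Illustrative abort threshold near the asymptotic BB84 limit (about 11%).
ABORT_THRESHOLD = 0.11


def qber(alice_bits: Sequence[int], bob_bits: Sequence[int]) -> float:
    """Fraction of sifted sample bits on which Alice and Bob disagree."""
    if len(alice_bits) != len(bob_bits) or not alice_bits:
        raise ValueError("samples must be non-empty and of equal length")
    errors = sum(a != b for a, b in zip(alice_bits, bob_bits))
    return errors / len(alice_bits)


def binary_entropy(p: float) -> float:
    """Shannon entropy h(p) in bits; defined as 0 at p = 0 and p = 1."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def bb84_secret_fraction(q: float) -> float:
    """Asymptotic secret-key fraction 1 - 2h(Q), clipped at zero."""
    return max(0.0, 1.0 - 2.0 * binary_entropy(q))


if __name__ == "__main__":
    alice = [0, 1, 1, 0, 1, 0, 0, 1, 1, 0]
    bob = [0, 1, 1, 0, 1, 1, 0, 1, 1, 0]  # one flipped bit
    q = qber(alice, bob)
    print(f"QBER = {q:.2%}, abort = {q > ABORT_THRESHOLD}, "
          f"secret fraction = {bb84_secret_fraction(q):.3f}")
```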

The primary concern with PQC systems is the performance and architectural impact of larger keys, ciphertexts, and signatures, together with algorithms that can be more computationally demanding. Computational efficiency testing is therefore needed to examine key generation, encapsulation, and signing speed, as well as key size variations. Large-scale stress testing is needed here too, including the emulation of users and usage/traffic patterns to monitor KPIs such as latency and throughput under load. Testing must also cover failure scenarios, for example mismatched PQC algorithm selections between peers and the inability of one party to support PQC.
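Computational efficiency testing ultimately reduces to timing operations repeatedly and reporting latency distributions rather than single averages, since tail latency (p99) is often what users notice under load. The sketch below is a minimal single-threaded harness. `placeholder_operation` is a hypothetical stand-in workload, not a PQC algorithm, and should be replaced with the key generation, encapsulation, or signing call of the library under test. Large-scale stress testing with many emulated users also needs concurrency and traffic emulation, which this sketch does not attempt.

```python
import hashlib
import math
import time
from statistics import mean
from typing import Callable, Dict, List


def percentile(samples: List[float], pct: float) -> float:
    """Nearest-rank percentile, pct in (0, 100]."""
    if not samples or not 0 < pct <= 100:
        raise ValueError("need samples and 0 < pct <= 100")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]


def benchmark(operation: Callable[[], object], iterations: int = 1000) -> Dict[str, float]:
    """Time repeated calls of `operation` and summarise latency in microseconds."""
    latencies_us = []
    for _ in range(iterations):
        start = time.perf_counter()
        operation()
        latencies_us.append((time.perf_counter() - start) * 1e6)
    return {
        "mean_us": mean(latencies_us),
        "p50_us": percentile(latencies_us, 50),
        "p99_us": percentile(latencies_us, 99),
    }


def placeholder_operation() -> bytes:
    # Stand-in workload only: expands a seed to a 1184-byte buffer with SHAKE-256.
    # Replace with the real keygen/encapsulate/sign call under test.
    return hashlib.shake_256(b"seed").digest(1184)


if __name__ == "__main__":
    print(benchmark(placeholder_operation))
```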

For hybrid systems, these techniques must be combined and extended to account for the increased complexity. Functional testing validates the quantum-classical interface and checks that timing and synchronization are stable. Security and resilience testing is also critical and should include the simulation of side-channel attacks and verification that the system falls back to PQC if a QKD link fails.
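Fallback from hybrid to PQC-only operation can be illustrated at the key-derivation step. A common pattern, assumed here rather than taken from any specific product, is to feed both the QKD-delivered key and the PQC shared secret into a key derivation function, so that under standard assumptions about the KDF the session key stays secret as long as either input does, and to derive from the PQC secret alone when the QKD link is down. The sketch implements HKDF-SHA256 (RFC 5869) with the standard library; the function names, the zero salt, and the context labels are hypothetical choices.

```python
import hashlib
import hmac
from typing import Optional, Tuple

HASH_LEN = 32  # SHA-256 output size in bytes


def hkdf_extract(salt: bytes, ikm: bytes) -> bytes:
    """HKDF-Extract (RFC 5869): PRK = HMAC-SHA256(salt, IKM)."""
    return hmac.new(salt, ikm, hashlib.sha256).digest()


def hkdf_expand(prk: bytes, info: bytes, length: int) -> bytes:
    """HKDF-Expand (RFC 5869): concatenate T(1), T(2), ... and truncate."""
    if length > 255 * HASH_LEN:
        raise ValueError("requested output too long for HKDF-SHA256")
    okm, block, counter = b"", b"", 1
    while len(okm) < length:
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]


def derive_session_key(pqc_secret: bytes, qkd_key: Optional[bytes],
                       context: bytes = b"hybrid-session") -> Tuple[bytes, str]:
    """Combine QKD and PQC secrets when both exist; fall back to PQC alone."""
    if qkd_key:
        ikm, mode = qkd_key + pqc_secret, "hybrid"
    else:
        ikm, mode = pqc_secret, "pqc-fallback"  # QKD link unavailable
    prk = hkdf_extract(b"\x00" * HASH_LEN, ikm)
    # Binding the mode into `info` keeps hybrid and fallback keys distinct.
    return hkdf_expand(prk, context + b"|" + mode.encode(), HASH_LEN), mode
```

In a resilience test, the fallback path can be exercised by withholding the QKD key and checking that a session key is still derived and that the reported mode changes.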

Test and measurement platforms for QKD, PQC, KMS and hybrid systems

Here we break down some of the core technologies that enable the testing and validation of post-quantum implementations.

We begin with QKD systems and their multi-layer testing process. For the physical optical layer, VIAVI has developed the MAP-300, a reconfigurable photonic system used to emulate the broad range of power and spectral loading scenarios found in live networks, allowing engineers to validate the resiliency of new QKD systems. Specifically, the device generates quantum channel degradation events, including in-band noise from amplifiers, polarization disturbances, and reflection events, all of which degrade QKD signals and raise the QBER. Such emulation is needed to test how resiliently and efficiently QKD systems perform self-regulation protocols such as phase stabilization, adaptive error correction, and privacy amplification tuning. These tests can be benchmarked in terms of the key generation rate to ensure that channel degradation events do not severely impact the key supply to the service layers.
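Benchmarking by key generation rate can be sketched with a deliberately simplified model: assume each emulated impairment adds some optical loss, which scales the sifted key rate by the channel transmittance 10^(-loss/10), and raises the QBER, which shrinks the asymptotic BB84 secret fraction 1 - 2h(Q). The scenario names echo the degradation events described for the MAP-300, but all numbers, including the baseline sifted rate and the minimum acceptable rate, are hypothetical; they are not MAP-300 settings or measured results.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Impairment:
    name: str
    extra_loss_db: float  # additional optical loss introduced by the event
    qber: float           # resulting quantum bit error rate


def binary_entropy(p: float) -> float:
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def secret_key_rate(sifted_rate_bps: float, imp: Impairment) -> float:
    """Simplified asymptotic BB84 secret key rate under one impairment."""
    transmittance = 10 ** (-imp.extra_loss_db / 10)
    fraction = max(0.0, 1.0 - 2.0 * binary_entropy(imp.qber))
    return sifted_rate_bps * transmittance * fraction


# Hypothetical scenarios and thresholds for illustration only.
BASELINE_SIFTED_BPS = 10_000.0
MIN_SECRET_BPS = 1_000.0
SCENARIOS = [
    Impairment("baseline", 0.0, 0.02),
    Impairment("amplifier in-band noise", 0.0, 0.06),
    Impairment("polarization disturbance", 0.5, 0.08),
    Impairment("reflection event", 3.0, 0.03),
]

if __name__ == "__main__":
    for imp in SCENARIOS:
        rate = secret_key_rate(BASELINE_SIFTED_BPS, imp)
        verdict = "OK" if rate >= MIN_SECRET_BPS else "BELOW MINIMUM"
        print(f"{imp.name:<26} {rate:8.1f} bit/s  {verdict}")
```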

The service layer requires a different platform: TeraVM. It is used both to emulate clients that fetch post-quantum pre-shared keys (PPKs) and to test the quality of experience under load as keys are continuously rotated. This helps verify compliance with the relevant ETSI specifications and validates the resiliency of the QKD key management system.
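Key-fetching clients at the service layer typically talk to a key management entity over a standardized interface. As one example, to our understanding ETSI GS QKD 014 defines a REST API in which a client requests keys from `/api/v1/keys/{slave_SAE_ID}/enc_keys` (with `number` and `size` query parameters) and receives a JSON key container listing `key_ID` and base64-encoded `key` values. The sketch below builds such a request URL and validates a response body offline. The host name and SAE IDs are hypothetical, mutual TLS and error handling are omitted, and the field names should be checked against the specification.

```python
import base64
import json
from typing import Dict
from urllib.parse import urlencode


def enc_keys_url(kme_host: str, slave_sae_id: str, number: int = 1, size_bits: int = 256) -> str:
    """Build a key-request URL in the style of ETSI GS QKD 014."""
    query = urlencode({"number": number, "size": size_bits})
    return f"https://{kme_host}/api/v1/keys/{slave_sae_id}/enc_keys?{query}"


def parse_key_container(body: str, expected_bits: int) -> Dict[str, bytes]:
    """Decode a key container and check every key has the requested length."""
    container = json.loads(body)
    keys: Dict[str, bytes] = {}
    for entry in container["keys"]:
        key = base64.b64decode(entry["key"])
        if len(key) * 8 != expected_bits:
            raise ValueError(f"key {entry['key_ID']} has {len(key) * 8} bits, expected {expected_bits}")
        keys[entry["key_ID"]] = key
    return keys


if __name__ == "__main__":
    print(enc_keys_url("kme-a.example", "SAE_B", number=2))
    sample = json.dumps({"keys": [{"key_ID": "k-001", "key": base64.b64encode(bytes(32)).decode()}]})
    print({k: len(v) for k, v in parse_key_container(sample, 256).items()})
```

A load test would issue many such requests concurrently while keys rotate, checking both latency and that every delivered key has the expected length.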

For PQC systems, the main challenge is performance, and TeraVM Security can be used to test the impact of the algorithms. This software-based tool stress tests VPN headends that use the new PQC algorithms by emulating tens of thousands of users and their application traffic (collaboration tools, video conferences) and measuring the resulting latency, throughput, and mean opinion scores (MOS) under load, among other KPIs. It also tracks PQC-specific metrics such as key size variations and re-keying rounds. For hybrid test scenarios, the system can test PQC-enabled initiators against a non-PQC responder to ensure that a classical VPN tunnel is still established.
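The PQC-enabled initiator versus non-PQC responder case comes down to negotiation logic. In IKEv2, RFC 9370 lets peers negotiate additional key exchanges alongside the classical one; the sketch below abstracts that into plain lists of group names and shows the outcomes a test should cover: a hybrid tunnel, a classical-only tunnel, and rejection when policy requires PQC. The `Peer` type, the group labels, and the `require_pqc` policy flag are hypothetical, not IKEv2 transform identifiers or TeraVM settings.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Peer:
    name: str
    classical_groups: List[str]
    pqc_groups: List[str] = field(default_factory=list)


def first_common(preferred: List[str], supported: List[str]) -> Optional[str]:
    """First entry in the initiator's preference order that the responder supports."""
    return next((g for g in preferred if g in supported), None)


def negotiate(initiator: Peer, responder: Peer, require_pqc: bool = False) -> Dict[str, object]:
    classical = first_common(initiator.classical_groups, responder.classical_groups)
    if classical is None:
        return {"established": False, "reason": "no common classical group"}
    additional = first_common(initiator.pqc_groups, responder.pqc_groups)
    if additional is None and require_pqc:
        return {"established": False, "reason": "responder lacks PQC support"}
    # A missing additional key exchange leaves a classical-only tunnel.
    return {"established": True, "classical": classical, "additional_ke": additional}


if __name__ == "__main__":
    pqc_initiator = Peer("initiator", ["x25519", "ecp256"], ["mlkem768"])
    legacy_responder = Peer("legacy", ["ecp256"])
    print(negotiate(pqc_initiator, legacy_responder))
    print(negotiate(pqc_initiator, legacy_responder, require_pqc=True))
```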

Beyond the cryptographic endpoints, Key Management Systems (KMS) become a critical component in operational quantum-safe deployments, where interoperability, scalability, crypto-agility, and key-lifecycle performance are often the limiting factors. Using VIAVI TeraVM, operators can emulate large-scale, multi-vendor KMS environments and validate end-to-end key workflows across PQC-only, QKD-only, and hybrid PQC+QKD architectures. This includes stress testing algorithm agility (dynamically switching between classical, PQC, and hybrid cryptographic suites) while validating seamless fallback behavior and uninterrupted service continuity. The platform enables rigorous testing of key-lifecycle operations, including key generation, distribution, rotation, expiration, revocation, and recovery, under realistic application traffic, scaling events, and failure scenarios. KPIs such as key provisioning latency, re-keying cadence, control-plane overhead, synchronization accuracy, and policy enforcement are continuously measured. TeraVM also enables interoperability testing across heterogeneous KMS implementations and network elements. Together, these capabilities provide end-to-end hybrid quantum-safe validation, helping ensure that security remains cryptographically agile, operationally resilient, and performant at scale.
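Key-lifecycle testing implies a model of which state changes are legal and how long they take. The sketch below is a hypothetical, simplified lifecycle (generated, distributed, active, expired or revoked, destroyed); rotation is modeled as a successor key becoming active before the old key expires, and recovery is left out. It rejects illegal transitions and measures provisioning latency from generation to activation. The injectable clock lets tests use deterministic timestamps.

```python
import time
from enum import Enum, auto
from typing import Callable, List, Optional, Tuple


class KeyState(Enum):
    GENERATED = auto()
    DISTRIBUTED = auto()
    ACTIVE = auto()
    EXPIRED = auto()
    REVOKED = auto()
    DESTROYED = auto()


# Hypothetical policy: which states each state may move to.
ALLOWED = {
    KeyState.GENERATED: {KeyState.DISTRIBUTED, KeyState.REVOKED},
    KeyState.DISTRIBUTED: {KeyState.ACTIVE, KeyState.REVOKED},
    KeyState.ACTIVE: {KeyState.EXPIRED, KeyState.REVOKED},
    KeyState.EXPIRED: {KeyState.DESTROYED},
    KeyState.REVOKED: {KeyState.DESTROYED},
    KeyState.DESTROYED: set(),
}


class ManagedKey:
    def __init__(self, key_id: str, clock: Callable[[], float] = time.monotonic):
        self.key_id = key_id
        self.state = KeyState.GENERATED
        self._clock = clock
        self.history: List[Tuple[KeyState, float]] = [(KeyState.GENERATED, clock())]

    def transition(self, new_state: KeyState) -> None:
        """Move to `new_state`, raising ValueError if the policy forbids it."""
        if new_state not in ALLOWED[self.state]:
            raise ValueError(f"{self.key_id}: illegal {self.state.name} -> {new_state.name}")
        self.state = new_state
        self.history.append((new_state, self._clock()))

    def provisioning_latency(self) -> Optional[float]:
        """Seconds from generation to activation, or None if never activated."""
        generated_at = self.history[0][1]
        for state, timestamp in self.history:
            if state is KeyState.ACTIVE:
                return timestamp - generated_at
        return None


def rotate(old: ManagedKey, new: ManagedKey) -> None:
    """Activate the successor before retiring the old key, so service is continuous."""
    new.transition(KeyState.ACTIVE)
    old.transition(KeyState.EXPIRED)
```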

Finally, the physical fiber infrastructure itself must be secured and monitored. Here, the ONMSi Remote Fiber Test System can be used to continually scan the fiber network using optical time-domain reflectometry (OTDR) to automatically detect and locate faults or degradation that could indicate fiber tapping. Distributed Temperature and Strain Sensing systems can also monitor for the strain and temperature changes caused by physical tampering, such as bending or cutting the fiber, which would signal a possible attack.
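OTDR fault location rests on a time-of-flight relation: an event's distance is d = c·t / (2n), where t is the round-trip time of the reflected or backscattered light and n is the fiber's group index. The sketch below applies it and flags events that are new, or whose loss has grown, relative to a baseline trace, the kind of comparison that can reveal a tap. The group index of 1.468 is a typical assumption for standard single-mode fiber, and the event lists, tolerances, and helper names are hypothetical; this is not the ONMSi interface or its detection algorithm.

```python
from typing import List, Tuple

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0
Event = Tuple[float, float]  # (distance in metres, loss in dB)


def event_distance_m(round_trip_s: float, group_index: float = 1.468) -> float:
    """Distance to an OTDR event from its round-trip time: d = c * t / (2 * n)."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / (2 * group_index)


def changed_events(baseline: List[Event], current: List[Event],
                   distance_tol_m: float = 5.0, loss_tol_db: float = 0.1) -> List[Event]:
    """Events in `current` that are absent from `baseline` or show increased loss."""
    flagged = []
    for distance, loss in current:
        match = next((b for b in baseline if abs(b[0] - distance) <= distance_tol_m), None)
        if match is None or loss - match[1] > loss_tol_db:
            flagged.append((distance, loss))
    return flagged


if __name__ == "__main__":
    print(f"{event_distance_m(100e-6):.1f} m")  # event with a 100 microsecond round trip
    baseline = [(1200.0, 0.05), (5400.0, 0.30)]
    current = [(1201.0, 0.05), (5400.0, 0.60), (8000.0, 0.20)]
    print(changed_events(baseline, current))
```

Here the event at 5,400 m is flagged because its loss doubled, and the one at 8,000 m because it did not exist in the baseline; both would warrant investigation as possible tampering.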

Transition to a quantum-safe future, securely and efficiently

As these technologies mature, ensuring that architectures and deployments operate with maximum resilience, security, efficiency, and real-world reliability becomes crucial. This is particularly important at the optical layer, where quantum technologies intersect with physical infrastructure and where quantum optics must make the leap from experimental setups to reliable, field-ready solutions. Standards and conformance testing are essential to this transition, and success depends on trusted partners that combine quantum innovation with deep fiber expertise.