Generative AI in Microcontrollers | Generative AI is everywhere today: whether it’s powering creative content generation or enabling intelligent human-machine interactions, its significance is undeniable. While the technology is often associated with the cloud and high-performance computing, Alif Semiconductor is pushing its boundaries by bringing this intelligence to the edge, to tiny, power-efficient devices that operate far from data centers.

In this exclusive interview with Electronics Media, Pratibha Rawat, Technical Editor, speaks with Reza Kazerounian, President of Alif Semiconductor, about the company’s vision, strategy, and the future of edge AI in everyday devices.

1. What inspired Alif to bring Generative AI to microcontrollers? Was there a particular vision or strategy that made you believe this was the right move, and how did you imagine it could change things?

From the beginning, Alif has focused on anticipating where the market is heading rather than simply following trends. We saw the rapid rise of generative AI in the cloud and on powerful computing platforms, but also recognized that its true transformative potential would only be realized when it could run at the edge on the smallest, most power-efficient devices.

Our vision was to break down the barriers that kept advanced AI from reaching the billions of connected products that operate far from the data center, whether they are wearables, industrial sensors, or medical devices. We believed that bringing generative AI to microcontrollers would open the door to entirely new classes of applications, including devices that can understand, create, adapt, and interact in more natural and intelligent ways, all without depending on a constant network connection.

Strategically, this move aligns with our commitment to give developers freedom, scalability, and an open ecosystem. By integrating hardware acceleration for transformer models directly into our MCUs, we enable customers to run state-of-the-art AI workloads locally, reduce latency, strengthen privacy, and expand what is possible at the endpoint. We imagined, and are now seeing, use cases that were not feasible before, from real-time language processing to highly personalized user experiences in tiny, battery-powered devices.

2. Many expected on-device AI to be years away, yet we’re seeing it in products now. What factors are driving edge AI to hit devices faster than anticipated?

Several factors have accelerated the arrival of on-device AI. First, advances in neural processing technology have made it possible to deliver the performance needed for complex AI workloads within the tight power and size constraints of endpoint devices. At the same time, model architectures have become more efficient, allowing sophisticated capabilities like transformer networks to run on far smaller compute footprints than before.

Second, customer demand for lower latency, improved privacy, and reduced reliance on constant connectivity has grown significantly. Running AI locally means faster responses, enhanced security for sensitive data, and a better user experience, especially in scenarios where a network connection is unreliable or unavailable.

Third, the ecosystem around AI development has matured rapidly. Open-source frameworks, accessible toolchains, and the availability of pre-trained models have lowered the barrier for developers to create and deploy AI workloads at the edge.

Finally, at Alif we have been intentional about bridging the gap between high-performance AI and the low-power embedded world. By integrating advanced NPUs and hardware acceleration for transformer models into our MCUs, we have given developers the ability to bring generative AI and other advanced capabilities into devices much sooner than the market initially expected. This convergence of better hardware, smarter software, and real-world demand is why edge AI is here today, not years from now.

3. As generative AI comes to edge devices, there’s a lot of talk about avoiding vendor lock-in and sticking with open tools and standard NPUs. How is Alif making sure developers aren’t tied down to one ecosystem, but can still get top performance from your chips?

Just as developers avoid CPUs with proprietary instruction sets so that they are not locked into ecosystems with limited tool availability, we expect that a common NPU architecture will give developers more options to choose from. It will also give ecosystem partners a stronger incentive to invest time and effort in supporting the technology, because their innovations will reach a larger user base. All of Alif’s products are scalable and software- and pin-compatible, so developers have a seamless experience when upgrading to a more powerful chip for their next-generation devices.

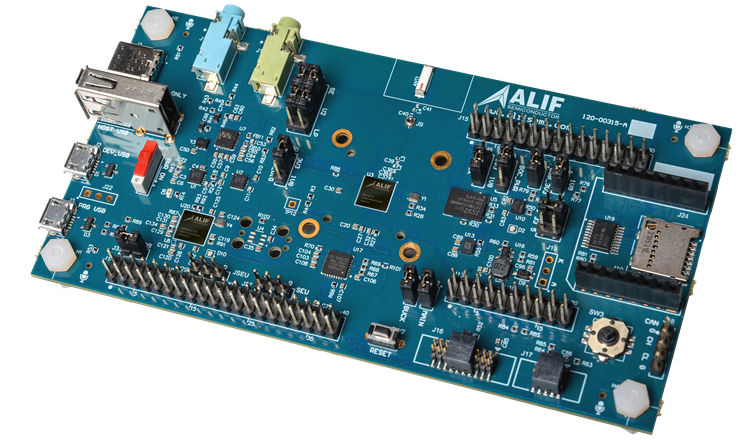

4. The new Ensemble MCUs are said to handle on-chip generative AI workloads. What does this mean in practice for developers and end users, and would you like to elaborate on your latest Ensemble MCUs?

The E4, E6, and E8 range from dual-core to quad-core microcontrollers and are based on the same well-known Ensemble architecture, but feature an improved high-throughput memory architecture and an upgraded neural processing unit (NPU), the Ethos™-U85. In an industry first for microcontrollers, Alif’s new Ensemble series features a class-leading system architecture that includes hardware acceleration for transformer networks, requiring only 36 mW for small language model (SLM) execution. Alif’s latest hardware sets new standards for imaging applications, performing power-efficient object detection in less than 2 ms and image classification in less than 8 ms.

This hardware acceleration for transformer networks enables generative AI workloads at the edge. Transformer networks enhance endpoint applications by quickly contextualizing new data with key insights from past inputs. Run locally, this delivers instant, accurate results from multimodal AI in endpoint products focused on vision, voice, text, and sensor fusion, without relying on the cloud.

The E4 is a dual-core microcontroller that contains the Ethos-U85, an efficient NPU for generative AI/ML acceleration that supports recurrent neural networks, convolutional neural networks, and transformer networks. It includes a total of two Cortex-M55 MCU cores and three Ethos NPU cores that improve machine learning performance and power efficiency. It integrates up to 5.5 MB of on-chip MRAM and 9.75 MB of on-chip SRAM.

The E6 is a triple-core fusion processor that provides even more performance than the E4 with the addition of one Cortex-A32 core. This enables high-level operating systems, like Linux, to be used in parallel with real-time systems.

The E8 is a quad-core fusion processor and the class-leading member of the Ensemble family, offering maximum performance. The E8 adds a second Cortex-A32 to support the most demanding applications. With the addition of the Ethos-U85, the Ensemble family delivers up to 454 GOPS of machine learning performance in total.

5. Many big MCU manufacturers are now adding NPUs to their devices. How does Alif maintain its competitive edge in this increasingly crowded space?

Alif is applying what it learned from being first to market four years ago, with MCUs and fusion processors using the Ethos-U55 NPU, to enhance our new Ethos-U85-based products. This enables us to maintain a significant lead over competing suppliers who will be introducing their own Ethos-U85 products.

Alif stands apart by designing the entire system architecture to support edge AI, not just integrating an NPU. We use up to three NPUs in our new devices so that the appropriate compute resources are applied to the immediate task for maximum power efficiency. For example, the MCU is architected with a robust, low-power, always-on region of the chip that has adequate memory and interfaces to collect data (vision, voice, vibration), performs substantial CPU and NPU tasks to make an efficient initial classification of what was detected, and then progressively wakes other portions of the chip as needed. Those remaining portions can execute very heavy ML workloads on additional CPU/NPU pairs, now accelerated even further by the Ethos-U85, and then quickly go back to sleep. It is also in this high-performance region that Alif has applied what it learned from being first to market about which functions and interfaces are needed to satisfy target use cases: the amount of on-chip memory (Alif leads the market in on-chip memory size for this class of product), a tiered approach to rapidly accessing external memory, heavy image and audio processing capability, display driving with graphics acceleration, high-speed interfaces, and handling of analog signals coming into and out of the chip. All of this capability is wrapped in a very granular power management scheme that automatically controls which portions of the chip are powered on or off based on the instantaneous workload.
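Editor's illustration: the staged wake-up described above can be thought of as a cascade, in which a cheap always-on stage screens every sample and a heavier stage is powered only when the first stage sees something worth a closer look. The Python sketch below is a toy model of that idea under stated assumptions. The `Region` type, the `process` function, the scoring lambdas, and the thresholds are all hypothetical names invented for this example; none of them is Alif's firmware, SDK, or power-management API.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Region:
    """A hypothetical power domain pairing a CPU/NPU with a model."""
    name: str
    score: Callable[[float], float]  # toy stand-in for an inference call
    escalate_at: float               # score needed to wake the next region
    always_on: bool = False
    awake: bool = False


def process(sample: float, regions: List[Region]) -> Tuple[str, float, List[str]]:
    """Run the cheapest region first and wake later ones only when needed."""
    woken: List[str] = []
    last_name, last_score = "", 0.0
    for region in regions:
        region.awake = True
        woken.append(region.name)
        last_name, last_score = region.name, region.score(sample)
        if last_score < region.escalate_at:
            break  # not interesting enough to justify more power
    for region in regions:
        region.awake = region.always_on  # heavy regions go back to sleep
    return last_name, last_score, woken


# Assumed two-stage setup: a cheap screen, then a heavier classifier.
regions = [
    Region("always_on", score=lambda x: min(1.0, abs(x)),
           escalate_at=0.5, always_on=True, awake=True),
    Region("high_performance", score=lambda x: 1.0 if x > 0.8 else 0.0,
           escalate_at=float("inf")),
]

quiet = process(0.1, regions)   # handled entirely by the always-on stage
event = process(0.9, regions)   # escalates to the high-performance stage
```

In this toy model a quiet sample never wakes the second region, while a strong one does, after which only the always-on region stays powered. A real device would replace the lambdas with actual inference calls and the `awake` flag with hardware power-domain control.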

Alif built these functions and capabilities not into a single Ethos-U85 point product, but into multiple compatible and scalable MCUs and fusion processors that extend the existing Ensemble family. This includes software compatibility with the previous 19 Ensemble devices based on the Ethos-U55, so software can be reused across a wide range of applications. There is also hardware compatibility with eight previous Ensemble devices in the BGA194 package. That means drop-in footprint compatibility, so developers can upgrade to the Ethos-U85 with no circuit board changes.

These robust capabilities, which can run for a long time on very small batteries, together with a carefully planned evolution of compatible devices, are what set Alif apart from other MCU manufacturers adopting the Ethos-U85. This is a direct result of being first to market four years ago.

6. What kind of real-world applications will benefit most from on-chip generative AI in MCUs?

We are already seeing generative AI make its way into real-world applications across a variety of industries. One particularly interesting and accessible example is in the world of children’s toys. Toys built on the new Ensemble series use embedded generative AI models to interact with their surroundings and create dynamic, engaging experiences.

For instance, some AI-enabled toys can recognize objects, respond to voice commands, and even generate personalized stories based on a child’s environment or previous interactions. Instead of relying on pre-recorded scripts, these toys use small language models (SLMs) to craft original narratives in real time, adapting the tone, characters, and plot based on the child’s age, preferences, or emotional cues. This not only enhances the educational and entertainment value of the toy, but also supports deeper engagement, creativity, and learning through play.

For all toy manufacturers, security is of the utmost importance when children use toys and games that are connected to a public network. When these products are online, the connection can be hacked for nefarious purposes, putting children and families at risk. Eliminating or vastly reducing the time spent connected to the network significantly lowers the chance of intrusion. Toys and games that have local generative AI capability, with little or no need to be network-connected, are an ideal solution.

As these capabilities become more efficient and power-optimized, we can expect to see Generative AI integrated into a wide range of smart consumer products beyond toys—unlocking new forms of interaction and intelligence at the edge.

7. Looking ahead, what is the future of MCUs in the era of edge AI, and what should engineers be prepared for as this technology evolves?

Compute architectures will continue to appear that do more work per clock while also being more power-efficient. Beyond that, a heavy emphasis will be on evolving memory.

For many years, MCUs have used familiar, broadly similar implementations of on-die memory to support the CPU core(s). With the recent addition of on-die neural processing accelerated by NPU cores, the need for fast and efficient data movement has driven wider and faster pathways in the bus structure that connects cores and peripherals on the die and reaches off the die to external memory components. Alif has already taken this approach for our new AI/ML devices, and we’re currently designing our next approach with several goals: to provide developers with enough volatile and non-volatile memory to keep pace with the ever-growing memory density needs of complex ML models and SLMs, to keep power consumption to a minimum, to enable efficient memory expansion outside the chip if needed, to maintain high levels of IP and content security, and to keep cost and physical footprint to a minimum. Without memory solutions that evolve to reach these goals, it will not be possible to have a high concentration of compute capacity within a very small physical space that can be powered by small batteries for a long time, which ultimately will be required by AI/ML-enabled wearable and portable products that people find useful and practical.