Fujitsu Laboratories Ltd. and Kumamoto University today announced the development of technology that makes it easy to create the training data needed to apply AI to time-series data, such as data from accelerometers and gyroscopic sensors.

Time-series data obtained from sensors contains nothing but ever-changing numerical values. To create training data for machine learning, it was therefore necessary to attach finely detailed labels to the data by hand, following its changing values and indicating what was being done, and when, at each point where the values changed. This required enormous numbers of man-hours, and progress in applying AI to time-series data had been limited.

Fujitsu Laboratories and Kumamoto University have now made it possible to automatically create highly accurate training data, with an appropriate label for each action, simply by manually attaching a single label to each longer time period. That label indicates the main action in the period according to human judgement, even if the period contains multiple actions. Because this significantly reduces the number of man-hours required, the technology will accelerate the use of AI with time-series data. It is expected to make it easier to build services such as fall detection, operational functionality checks, and abnormality detection for machines into smartphones and various other devices.

Development Background

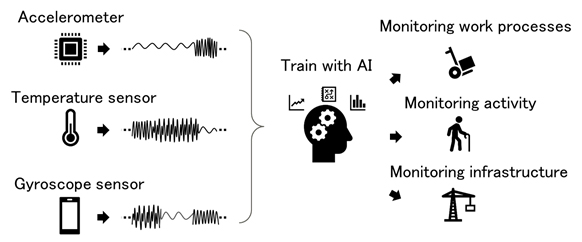

In recent years, with the evolution of technologies such as the Internet of Things (IoT), it has become possible to obtain large amounts of time-series data from a variety of sensors. For example, if AI can be made to determine the meaning of the motions of people and objects from the characteristics captured by accelerometers, advanced functionality for monitoring people and machines is expected to be incorporated into smartphones and various other equipment. To apply AI to this sort of time-series data, it is necessary to create training data with which to train the AI.

Figure 1: Examples of AI monitoring using time-series data

Issues

Time-series data obtained from sensors consists only of numbers recording the sensor values moment by moment, so creating training data for AI requires attaching meaning to the data that indicates “what” (labels) and “when” (segments). For example, accelerometer data recorded while a person goes running contains intermingled data from periods when the person is running, walking, and standing still. To create training data for AI, the data must therefore be separated into segments labeled “Running,” “Walking,” and “Stopped.”
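As a minimal illustration of the “what” and “when” described above, labeled segments can be represented as start and end times plus a label, then expanded into one label per sensor sample. The sketch below is a hypothetical Python representation that assumes a fixed sampling rate; the names `Segment` and `per_sample_labels` are illustrative and are not part of the described system.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Segment:
    """One labeled stretch of a sensor recording (hypothetical structure)."""
    start_s: float  # start time in seconds (inclusive): the "when"
    end_s: float    # end time in seconds (exclusive)
    label: str      # the "what", e.g. "Running"


def per_sample_labels(segments: List[Segment], n_samples: int,
                      rate_hz: float) -> List[Optional[str]]:
    """Expand segments into one label per sample; uncovered samples stay None."""
    labels: List[Optional[str]] = [None] * n_samples
    for seg in segments:
        first = max(int(seg.start_s * rate_hz), 0)
        last = min(int(seg.end_s * rate_hz), n_samples)
        for i in range(first, last):
            labels[i] = seg.label
    return labels


segments = [
    Segment(0.0, 2.0, "Running"),
    Segment(2.0, 3.0, "Walking"),
    Segment(3.0, 4.0, "Stopped"),
]
# 4 seconds sampled at 2 Hz gives 8 samples.
print(per_sample_labels(segments, n_samples=8, rate_hz=2.0))
# ['Running', 'Running', 'Running', 'Running', 'Walking', 'Walking', 'Stopped', 'Stopped']
```

Samples not covered by any segment stay `None`, which keeps unlabeled gaps explicit rather than silently assigning them a default action.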

Conventionally, the typical process for creating this sort of training data was to record video of the behavior while measuring the time-series data, identify second by second which behavior corresponded to the changes in the numerical values, and attach the labels manually. Because this process required a significant amount of work and time, the application of AI to time-series data saw limited progress, and there was demand for a technology that could automate labeling and reduce the workload.

About the Newly Developed Technology

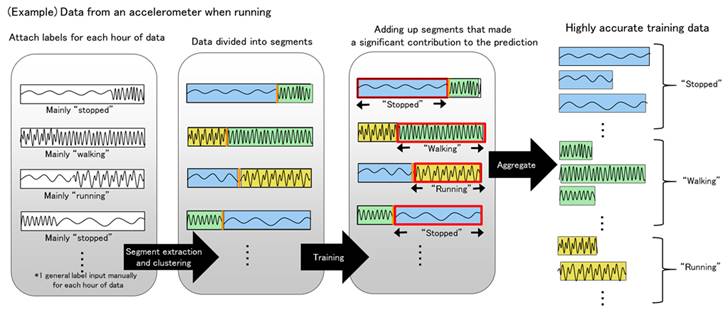

Fujitsu Laboratories and Kumamoto University have now developed a technology that can automatically create highly accurate training data for applying AI to time-series data, simply from an input label expressing the main action underway over a longer time segment (for example, one hour). The features of the newly developed technology are as follows.

- Extracting appropriate segments

By examining time-series data, this technology learns the characteristics of periods in which the same activity continues and of points at which the activity changes. It then automatically extracts from the time-series data the appropriate time periods, each covering actions that share the same characteristics.

- Highly accurate labeling

With this technology, users attach a single broad label to a long segment of data (for example, one hour), such as “running” if most of that segment is spent running. A deep neural network is trained to predict these labels, and each estimated label is then used to calculate which parts of the time-series data contributed most to that prediction. By aggregating the time periods with a high degree of contribution as label candidates, the system creates training data that enables accurate prediction.
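The two features above can be sketched together in a few lines of Python. This is a simplified stand-in, not the Fujitsu and Kumamoto University method: segments are found with a hand-written rule (a jump in per-window mean and standard deviation) rather than learned characteristics, and the per-window contribution scores, which the described system derives from a trained deep neural network, are assumed to be supplied as input. All function names, thresholds, and sample values are hypothetical.

```python
from statistics import mean, pstdev

# Hypothetical sketch: a simple rule-based stand-in for the learned
# segmentation and DNN-based contribution analysis described above.


def window_features(signal, window):
    """Return (mean, population std) for each non-overlapping window."""
    feats = []
    for i in range(0, len(signal) - window + 1, window):
        chunk = signal[i:i + window]
        feats.append((mean(chunk), pstdev(chunk)))
    return feats


def extract_segments(feats, threshold):
    """Group consecutive windows into segments.

    A new segment starts whenever the combined change in mean and std
    between neighboring windows exceeds `threshold` (an assumed rule).
    Returns (first_window, end_window_exclusive) pairs.
    """
    if not feats:
        return []
    segments, start = [], 0
    for i in range(1, len(feats)):
        (m0, s0), (m1, s1) = feats[i - 1], feats[i]
        if abs(m1 - m0) + abs(s1 - s0) > threshold:
            segments.append((start, i))
            start = i
    segments.append((start, len(feats)))
    return segments


def label_segments(segments, contributions, coarse_label, min_contribution):
    """Give `coarse_label` to segments whose mean contribution is high.

    `contributions` holds one score per window; in the described system
    such scores would come from a trained deep neural network, here they
    are simply assumed inputs. Low-contribution segments get None.
    """
    labeled = []
    for start, end in segments:
        score = mean(contributions[start:end])
        label = coarse_label if score >= min_contribution else None
        labeled.append((start, end, label))
    return labeled


if __name__ == "__main__":
    # Toy accelerometer trace: still, then oscillating, then still again.
    signal = [0.0] * 8 + [2.0, -2.0] * 4 + [0.0] * 8
    feats = window_features(signal, window=4)
    segments = extract_segments(feats, threshold=1.0)
    # Hypothetical contribution scores for the coarse label "running".
    contributions = [0.1, 0.2, 0.9, 0.8, 0.1, 0.0]
    print(label_segments(segments, contributions, "running", 0.5))
    # [(0, 2, None), (2, 4, 'running'), (4, 6, None)]
```

Under these assumptions, window index `k` covers samples `k * window` through `(k + 1) * window - 1` of the original signal, so each labeled window range maps directly back to a time period.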

Figure 2: Diagram of the newly developed technology

Effects

Fujitsu Laboratories and Kumamoto University conducted a trial in which they labeled time-series data recorded by accelerometers during mock factory work processes, such as polishing. The trial confirmed that the technology correctly labeled 92% of time periods. They judged this to be equivalent to the highly accurate results obtained when manually and finely labeled data is used as training data.
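The 92% figure is a per-period agreement rate. Assuming “correctly labeled” means that a time period's generated label matches a manually created reference label (the source does not define the metric further), it could be computed as in the sketch below. The function name and the sample lists are hypothetical and are not the trial's data.

```python
def period_accuracy(predicted, reference):
    """Fraction of time periods whose predicted label equals the reference.

    Assumes both lists hold one label per time period, in the same order.
    """
    if len(predicted) != len(reference):
        raise ValueError("predicted and reference must have the same length")
    if not reference:
        raise ValueError("at least one time period is required")
    matches = sum(p == r for p, r in zip(predicted, reference))
    return matches / len(reference)


# Hypothetical example: 23 of 25 periods agree with the manual reference.
reference = ["polishing"] * 25
predicted = ["polishing"] * 23 + ["idle"] * 2
print(period_accuracy(predicted, reference))  # 0.92
```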

With this technology, training data for AI can easily be created from time-series data, which is expected to further the development of functions that use AI to identify activities captured by sensors. In addition, because the technology makes its determinations based only on the numerical characteristics of time-series data and does not depend on the type of sensor, it could also be applied to time-series data from devices such as temperature sensors and pulse wave sensors.

Future Plans

Fujitsu Laboratories and Kumamoto University aim to conduct field trials using time-series data from a variety of fields, with the goal of commercializing this technology as a preprocessing technology for time-series data as part of Fujitsu Human Centric AI Zinrai, Fujitsu Limited’s AI technology, during fiscal 2019.