Fujitsu Leverages Deep Learning to Achieve World’s Most Accurate Recognition of Complex Actions & Behaviors Based on Skeleton Data

Fujitsu Laboratories Ltd. announced the development of a technology that utilizes deep learning to recognize the positions and connections of adjacent joints in complex movements or behavior in which multiple joints move in tandem. This makes it possible to achieve greater accuracy in recognizing, for instance, when a person performs a task like removing objects from a box. This technology successfully achieved the world’s highest accuracy against the world standard benchmark in the field of behavior recognition, with significant gains over the results achieved using conventional technologies, which don’t make use of information on neighboring joints.

By applying this technology to check manufacturing procedures or detect unsafe behavior in public spaces, Fujitsu aims to contribute to significant improvements in public and workplace safety, helping to deliver on the promise of a safer and more secure society for all.

Fujitsu will present the details of this technology at the 25th International Conference on Pattern Recognition (ICPR 2020), which is being held online from January 10th, 2021 (Sunday) to January 15th, 2021 (Friday).

Background

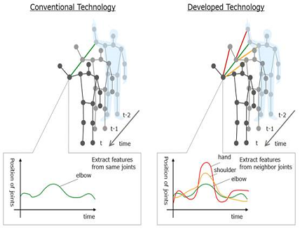

In recent years, advances in AI technology have made it possible to recognize human behavior from video images using deep learning. This technology offers a variety of promising applications in a wide range of real-world scenarios, for example, in performing checks of manufacturing procedures in factories or detecting unsafe behavior in public spaces. In general, human behavior recognition utilizing AI relies on temporal changes in the position of each of the skeletal joints, including in the hands, elbows, and shoulders, as identifying features, which are then linked to simple movement patterns such as standing or sitting.
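To illustrate what "temporal changes in the position of each joint" can mean as a feature, the sketch below computes frame-to-frame displacement vectors per joint. It is a minimal illustration only: the joint names, the 2D coordinates, and the `joint_displacements` helper are assumptions made for this example, not part of any Fujitsu system.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]
Frame = Dict[str, Point]  # joint name -> (x, y) position in one video frame


def joint_displacements(frames: List[Frame]) -> Dict[str, List[Point]]:
    """Return, for each joint, its displacement between consecutive frames."""
    if not frames:
        return {}
    features: Dict[str, List[Point]] = {name: [] for name in frames[0]}
    for prev, curr in zip(frames, frames[1:]):
        for name in features:
            (x0, y0), (x1, y1) = prev[name], curr[name]
            features[name].append((x1 - x0, y1 - y0))
    return features


# Hypothetical three-frame clip: the hand moves right, the elbow moves up,
# and the shoulder stays still.
frames = [
    {"hand": (0.0, 0.0), "elbow": (1.0, 1.0), "shoulder": (2.0, 2.0)},
    {"hand": (0.5, 0.0), "elbow": (1.0, 1.5), "shoulder": (2.0, 2.0)},
    {"hand": (1.5, 0.0), "elbow": (1.0, 2.0), "shoulder": (2.0, 2.0)},
]
features = joint_displacements(frames)
print(features["hand"])  # [(0.5, 0.0), (1.0, 0.0)]
```

Per-joint features of this kind treat each joint independently, which is enough for simple patterns such as standing or sitting.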

Using time-series behavior-recognition technology developed by Fujitsu Laboratories, Fujitsu has realized a deep learning model that recognizes behavior from images with high accuracy, even for complex behaviors in which multiple joints move in conjunction with each other, such as removing objects from a box during unpacking.

About the Newly Developed Technology

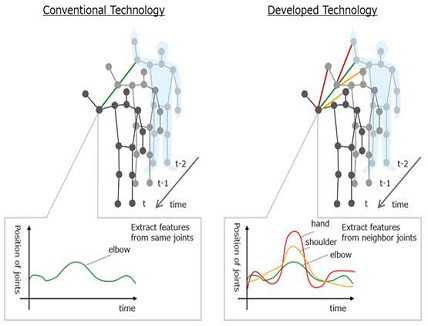

Complex movements like unpacking involve hand, elbow, and shoulder joints moving in tandem as the arm bends and stretches. Fujitsu has developed a new AI model based on a graph convolutional neural network. The model treats each joint position as a node (vertex), connects adjacent joints with edges that follow the structure of the human body, and performs convolution operations on the resulting graph. By training this model in advance on time-series joint data, the connection strength (weight) between neighboring joints can be optimized, and the connection relationships that are effective for behavior recognition can be acquired. Conventional technologies needed to accurately grasp the individual characteristics of each joint. A trained model, by contrast, can extract the combined features of linked adjacent joints, making it possible to achieve highly accurate recognition of complex movements.
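The idea of a graph convolution over a skeleton can be sketched in a few lines. The example below is a generic, simplified layer of the form relu((A ∘ M) X W), where A is the joint adjacency matrix with self-loops, M holds per-edge connection strengths, X holds per-joint features, and W mixes feature channels. It is not Fujitsu's model: the three-joint arm, all function names, and the fixed weight values are assumptions for illustration, and normalization and training are omitted.

```python
from typing import List, Tuple

Matrix = List[List[float]]

# Hypothetical three-joint arm: 0 = hand, 1 = elbow, 2 = shoulder.
NUM_JOINTS = 3
EDGES = [(0, 1), (1, 2)]  # edges connect anatomically adjacent joints


def adjacency_with_self_loops(num_nodes: int, edges: List[Tuple[int, int]]) -> Matrix:
    """Build a symmetric adjacency matrix in which each joint also links to itself."""
    a = [[1.0 if i == j else 0.0 for j in range(num_nodes)] for i in range(num_nodes)]
    for i, j in edges:
        a[i][j] = 1.0
        a[j][i] = 1.0
    return a


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of two nested-list matrices."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def graph_conv(x: Matrix, adjacency: Matrix, edge_weight: Matrix, w: Matrix) -> Matrix:
    """One graph convolution layer: relu((A * M) X W), with * elementwise."""
    weighted = [[a * m for a, m in zip(a_row, m_row)]
                for a_row, m_row in zip(adjacency, edge_weight)]
    out = matmul(matmul(weighted, x), w)
    return [[max(0.0, v) for v in row] for row in out]


adjacency = adjacency_with_self_loops(NUM_JOINTS, EDGES)
# Connection strengths would be learned during training; here they start at 1.0.
edge_weight = [[1.0] * NUM_JOINTS for _ in range(NUM_JOINTS)]
channel_mix = [[1.0, 0.0], [0.0, 1.0]]  # identity, so the aggregation is easy to read
x = [[1.0, 0.0], [0.0, 2.0], [3.0, 1.0]]  # two feature channels per joint

out = graph_conv(x, adjacency, edge_weight, channel_mix)
print(out)  # [[1.0, 2.0], [4.0, 3.0], [3.0, 3.0]]

# Halving the hand-elbow strength reduces the elbow's contribution to the hand.
edge_weight[0][1] = 0.5
out_weaker = graph_conv(x, adjacency, edge_weight, channel_mix)
print(out_weaker[0])  # [1.0, 1.0]
```

Each output row combines a joint's own features with those of its neighbors, scaled by the connection strengths. This is the sense in which a trained model can extract combined features of adjacent joints rather than treating each joint in isolation.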

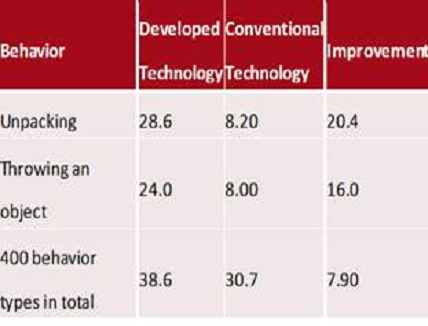

This technology was evaluated against the world standard benchmark in the field of behavior recognition using skeleton data. For simple behaviors in the open data set, such as standing and sitting, the accuracy rate remained at the same level as that of conventional technology that does not use information on neighboring joints. For complex behaviors like a person unpacking a box or throwing an object, however, the accuracy rate improved greatly, yielding an overall improvement of more than 7% over the conventional alternative and reaching the world’s highest recognition accuracy.

Future Plans

By adding the newly developed AI model for recognizing complex behaviors to the 100 basic behaviors already accommodated by Fujitsu’s behavioral analysis technology “Actlyzer,” it will become possible to rapidly deploy new, highly accurate recognition models. Fujitsu ultimately aims to leverage this new capability to roll out the system in fiscal year 2021, and to contribute to the resolution of real-world issues to deliver a safer and more secure society.