NR1-P – A novel AI-centric inference platform based on a new type of System-on-Chip (SoC) to empower the growth of real-life AI applications

NeuReality has unveiled NR1-P, a novel AI-centric inference platform, and has already started demonstrating it to customers and partners. The company says the platform, which is based on a new type of System-on-Chip (SoC), redefines today’s outdated AI system architecture.

NR1-P is NeuReality’s first implementation of the new AI-centric architecture, with other implementations to follow. The new prototype platform validates the technology and allows customers to integrate it in orchestrated data centers and other facilities. The company is now focused on its follow-on NR1 SoC, which it says will leverage its unique fusion architecture to deliver a 15X improvement in performance per dollar compared with the GPUs and ASICs currently offered by deep learning accelerator vendors.

The announcement follows NeuReality’s emergence from stealth in February 2021, with an $8M seed investment from Cardumen Capital, OurCrowd and Varana Capital, and the appointment of AI luminary Dr. Naveen Rao, former General Manager of Intel’s AI Products Group, to NeuReality’s Board of Directors.

The prototype platform aims to empower the growth of real-life AI applications in areas such as public safety, e-commerce, social networks, medical use cases, digital assistants, recommendation systems and Natural Language Processing (NLP).

The NR1-P solution targets cloud and enterprise datacenters, as well as carriers, telecom operators and other near-edge compute solutions. NeuReality expects these customers to hit scalability and cost barriers soon after they move their AI use cases from pilot to production deployment.

NR1-P is expected to enrich its users with cognitive computing capabilities, removing the scalability and total cost of ownership barriers that are associated with AI infrastructure today. At the same time, the AI-centric platform will lower the power consumption overhead and footprint waste, democratizing access to AI infrastructure at scale. The platform allows infrastructure owners and cloud service consumers to deploy intensive deep learning use cases at a large scale.

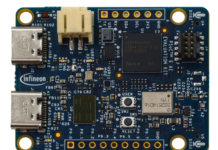

NeuReality’s NR1-P is built in a 4U server chassis equipped with sixteen Xilinx Versal Adaptive Compute Acceleration Platform (ACAP) cards. Versal ACAPs are highly integrated, fully software-programmable compute platforms that can adapt to evolving and diverse algorithms and go far beyond traditional FPGAs. The NR1-P SoC leverages these capabilities to implement the AI-centric compute engine while fusing unique system functions with datapath functions and the neural network processing function. Together, these optimize critical AI-inference flows while removing system bottlenecks.

Moshe Tanach, NeuReality’s Founder and CEO, commented: “Datacenter owners and AI as-a-service customers are already suffering from the operational cost of their ultra-scale use of compute and storage infrastructure. This problem is going to be further aggravated when their AI based features will be deployed in production and widely used. For these customers, our technology will be the only way to scale their deep learning usage while keeping the cost reasonable. Our mission was to redefine the outdated system architecture by developing AI-centric platforms based on a new type of System-on-Chip. Our platforms provide ideal compute solutions for these new types of AI use cases. With NR1-P, customers can finally experience this new type of solution, leverage it in their solutions, and prepare to integrate our upcoming NR1 product once it is in production.”

According to NeuReality’s benchmark testing, the NR1-P prototype platform delivers more than a 2X improvement in Total Cost of Ownership (TCO) compared to NVIDIA’s CPU-centric platforms running a disaggregated deep learning service. The company says this result also paves the way for further substantial improvements that will be integrated into NR1, NeuReality’s flagship product.

“Our technology provides a huge reduction in both CAPEX and OPEX for infrastructure owners. Customers using large scale use cases of image processing such as object detection or face recognition can already get 50% saving in the cost of the technology or twice the capacity for the same price when using NR1-P. The market is expecting us, semiconductor and system vendors, to support the investment in the future of AI and we at NeuReality are determined to deliver on these expectations so we may all leverage the promise of AI for a smarter, safer and cleaner world,” said Moshe Tanach.

Xilinx enthusiastically supports NeuReality’s product. Sudip Nag, Corporate Vice President for Software and AI Products at Xilinx, said: “We have been working closely with NeuReality to bring this new class of server to market. Combining the adaptability and low latency capabilities of Versal ACAP with NeuReality’s IP solves existing AI scalability challenges that currently inhibit the growth of the AI inference market.”