STMicroelectronics has announced the world’s first MCU AI Developer Cloud, which enables embedded developers and data scientists to develop their edge AI applications faster and more easily. Our editor Pratibha Rawat had a candid interview with Vincent RICHARD, STM32 AI Product Marketing Manager at STMicroelectronics. He highlighted the key features of STM32Cube.AI Developer Cloud and what is driving the adoption of AI in MCUs. He also talked about the STM32 model zoo, which can greatly ease the machine-learning (ML) workflow.

Electronics Media: ST offers a complete ecosystem to embedded developers to incorporate AI into their designs. What are the main benefits or features of STM32Cube.AI Developer Cloud?

Vincent RICHARD: We can summarize the main features and benefits in three points:

- The STM32Cube.AI Developer Cloud is a secure online platform accessible with myST credentials. This gives direct access: no download, no installation required, and it is free of charge.

- It provides a development jump start from the ST Model zoo, a repository of trainable deep-learning models and demos. This speeds up model selection and application development.

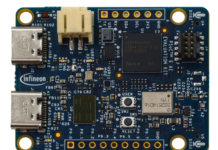

- And it delivers the world’s first online benchmarking service for edge-AI neural networks on STM32 MCUs. It features a cloud-accessible board farm that will be refreshed regularly with a broad range of STM32 MCUs. This lets data scientists and developers remotely measure the performance of the AI code generated for their optimized NN models.

STM32Cube.AI Developer Cloud offers a platform, services, and APIs well suited to seamless integration into existing data scientist and embedded AI developer workflows.

STM32Cube.AI Developer Cloud is now freely available to registered myST users.

Electronics Media: What is driving the growing interest in embedding AI into MCUs?

Vincent RICHARD: There are several key drivers for the adoption of AI in microcontrollers:

• Accuracy: AI can deliver better performance and versatility than rule-based methods.

• Privacy by design: Processing is performed locally (on-chip), which protects data privacy better than cloud-based AI.

• Frugality: Low data transmission and optimized AI running locally lead to substantial energy savings.

• Availability of an AI ecosystem for MCUs: Access to tools and solutions to easily develop and integrate optimized AI (e.g., STM32Cube.AI Developer Cloud and NanoEdge AI Studio for STM32).

The adoption of embedded AI in microcontrollers opens the door to new types of functionality for end customers. The added value for the final product is substantial, with almost no penalty on price and a positive impact on efficiency, since it reduces power consumption.

We have listed a selection of application examples (computer vision, predictive maintenance, audio event detection…) and associated benefits of running AI on STM32s at https://stm32ai.st.com/use-cases/

Electronics Media: STM32Cube.AI Developer Cloud is said to shorten time to market. Can you briefly describe its main process steps? Also, tell us about the STM32 model zoo.

Vincent RICHARD: STM32Cube.AI Developer Cloud offers a graphical step-by-step process to ease and accelerate implementation of AI on STM32 MCUs. Key steps are:

• Load NN model: Load a user-trained model or one from the STM32 model zoo. The user-trained model can come from the most popular AI frameworks: TensorFlow, PyTorch, ONNX…

• Optimize NN model: Select a model optimization option. Typically, users choose between RAM footprint and latency as the most critical parameter.

• Quantize: Perform post-training quantization to further reduce the size of the NN model parameters.

• Benchmark: Access the online benchmark service to remotely evaluate AI performance on various STM32 boards.

• Results: Visualize and optionally export the performance benchmark report.

• Generate code: Generate optimized AI code as C code or in a format compatible with the STM32CubeMX software suite.
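To make the quantization step more concrete, here is a minimal Python sketch of a generic affine int8 post-training quantization of a weight tensor. It is an illustration only: the interview does not describe how STM32Cube.AI Developer Cloud quantizes models, and the function names `quantize_int8` and `dequantize` are hypothetical, not part of any ST tool. The point it shows is why quantization shrinks parameters: each 32-bit float weight is stored as one signed byte plus a shared scale and zero point.

```python
import struct


def quantize_int8(weights):
    """Map float weights to int8 with a shared scale and zero point.

    Generic affine scheme (an assumption, not ST's documented method).
    """
    # The range must include 0.0 so that zero is exactly representable.
    w_min = min(min(weights), 0.0)
    w_max = max(max(weights), 0.0)
    scale = (w_max - w_min) / 255.0
    if scale == 0.0:
        scale = 1.0  # all weights are zero; any non-zero scale works
    zero_point = round(-128 - w_min / scale)
    # Round to the nearest step, shift by the zero point, clamp to int8.
    quantized = [
        max(-128, min(127, round(w / scale) + zero_point)) for w in weights
    ]
    return quantized, scale, zero_point


def dequantize(quantized, scale, zero_point):
    """Recover approximate float weights from their int8 representation."""
    return [(v - zero_point) * scale for v in quantized]


if __name__ == "__main__":
    weights = [-1.0, -0.5, 0.0, 0.5, 1.0]
    q, scale, zero_point = quantize_int8(weights)
    restored = dequantize(q, scale, zero_point)
    float_bytes = len(weights) * struct.calcsize("<f")  # 4 bytes per float32
    int8_bytes = len(q) * struct.calcsize("<b")  # 1 byte per int8
    print("int8 weights:", q)
    print("restored:", restored)
    print("bytes as float32:", float_bytes, "bytes as int8:", int8_bytes)
```

The restored weights differ from the originals by a small rounding error bounded by the step size `scale`, which is the accuracy cost traded for the roughly fourfold reduction in parameter storage.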

The STM32 AI model zoo is a collection of pre-trained machine-learning models that are optimized to run on STM32 microcontrollers. Available on GitHub, it is an invaluable resource for anyone looking to add AI to STM32-based projects.

In detail, it provides:

• A large collection of application-oriented models ready for retraining

• Scripts to easily retrain any model from user datasets

• Application code examples automatically generated from user AI models

Electronics Media: AI on the edge is the future. What is your perspective on this?

Vincent RICHARD: Yes, Edge AI is here to stay!

At ST we believe AI is not just a trend but a major disruption in the way objects interpret the vast amounts of data coming from an ever-growing number of sensors. This major transformation involves:

- Hardware: ST’s own AI acceleration engine design, able to reach much higher AI performance within a low power budget

- Low-level stack adaptation, e.g., the Helium M-Profile Vector Extension from Arm®, which boosts machine-learning performance

- An AI tool and software ecosystem to support the evolution and new challenges of the MLOps flow with, for instance, Jupyter Notebooks, a comprehensive model zoo, training scripts, and application code examples

These developments span many exciting fields in which we are committed to offering our customers solutions in the future… and this is just the beginning of the journey!