Supports TensorFlow Lite & Lattice Propel for Embedded Processor-Based Designs

Includes New Lattice sensAI Studio Tool for Easy ML Model Training

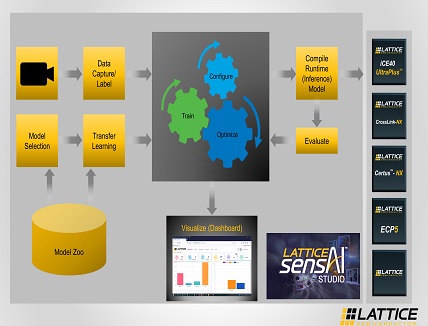

Lattice Semiconductor Corporation announced version 4.0 of its award-winning Lattice sensAI solution stack for accelerating AI/ML application development on low-power Lattice FPGAs. The enhancements include support for the Lattice Propel design environment for embedded processor-based development and for the TensorFlow Lite deep-learning framework for on-device inferencing. The new version also includes the Lattice sensAI Studio design environment for end-to-end ML model training, validation, and compilation. With sensAI 4.0, developers can use a simple drag-and-drop interface to build FPGA designs that combine a RISC-V processor with a CNN acceleration engine, making it quick and easy to implement ML applications on power-constrained Edge devices.

Demand is growing across multiple end markets for low-power AI/ML inferencing in applications such as object detection and classification. AI/ML models can be trained for a range of devices that must operate at low power at the Edge, including security and surveillance cameras, industrial robots, and consumer robotics and toys. The Lattice sensAI solution stack helps developers rapidly create AI/ML applications that run on flexible, low-power Lattice FPGAs.

“Lattice’s low-power FPGAs for embedded vision and sensAI solution stack for Edge AI/ML applications play a vital role in helping us bring leading-edge intelligent IoT devices to market quickly and efficiently,” said Hideto Kotani, Unit Executive, Canon Inc.

“With support for TensorFlow Lite and the new Lattice sensAI Studio, it’s now easier than ever for developers to leverage our sensAI stack to create AI/ML applications capable of running on battery-powered Edge devices,” said Hussein Osman, Marketing Director, Lattice.

Enhancements in version 4.0 of the Lattice sensAI solution stack include:

- TensorFlow Lite – support for this framework lowers power consumption and boosts data co-processing performance in AI/ML inferencing applications. TensorFlow Lite runs 2 to 10 times faster on a Lattice FPGA than on an ARM® Cortex®-M4-based MCU.

- Lattice Propel – the stack supports the Propel environment’s GUI and command-line tools for creating, analyzing, compiling, and debugging both the hardware and the software design of an FPGA-based processor system. Even developers unfamiliar with FPGA design can use the tool’s drag-and-drop interface to create AI/ML applications on low-power Lattice FPGAs with support for RISC-V-based co-processing.

- Lattice sensAI Studio – a GUI-based tool for training, validating, and compiling ML models optimized for Lattice FPGAs. The tool simplifies using transfer learning to deploy ML models.

- Improved performance – by leveraging advances in ML model compression and pruning, sensAI 4.0 can support image processing at 60 FPS at QVGA resolution or 30 FPS at VGA resolution.
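To put the two quoted operating points in perspective, the short Python sketch that follows compares raw input pixel throughput. It assumes the standard frame sizes of 320 × 240 for QVGA and 640 × 480 for VGA, which the announcement itself does not list. The `pixel_rate` helper is purely illustrative; it counts input pixels only and says nothing about model size or the actual per-pixel processing cost on the FPGA.

```python
# Assumed standard frame dimensions (width, height); the announcement
# names the resolutions but does not state their pixel dimensions.
RESOLUTIONS = {
    "QVGA": (320, 240),
    "VGA": (640, 480),
}


def pixel_rate(resolution: str, fps: int) -> int:
    """Return the number of input pixels per second for a resolution and frame rate."""
    width, height = RESOLUTIONS[resolution]
    return width * height * fps


# The two operating points quoted for sensAI 4.0.
qvga_rate = pixel_rate("QVGA", 60)  # 320 * 240 * 60
vga_rate = pixel_rate("VGA", 30)    # 640 * 480 * 30

print(f"QVGA @ 60 FPS: {qvga_rate:,} pixels/s")
print(f"VGA  @ 30 FPS: {vga_rate:,} pixels/s")
print(f"Ratio (VGA / QVGA): {vga_rate / qvga_rate:.1f}x")
```

Under these assumptions, the VGA mode handles 9,216,000 pixels per second versus 4,608,000 for the QVGA mode: twice the pixel throughput, even though its frame rate is half as high.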