This year, even the biggest corporations and governmental institutions, including those in the US, were not immune to hacks. According to Cyble’s latest Global Cybersecurity Report 2025, almost 15,000 incidents related to data breaches and leaks were reported.

The year 2026 will be marked by even more breaches, cybersecurity experts warn, as AI tools enable hackers to target thousands with a single click.

Looking back at 2025, one of the biggest hacks happened to the Australian airline Qantas. Hackers exposed the data of 5 million customers, including names, birth dates, and email addresses, and a few months ago started selling it on the dark web. There were many more similar cases involving companies like Oracle, Volvo, and SK Telecom, which led to data leaks or frozen business operations.

In the summer, security researchers uncovered the biggest data breach in history, which exposed 16 billion passwords, including those for Apple, Facebook, Google, Telegram, and many other services. Some attacks affected governmental institutions; recently, the US Congressional Budget Office was hacked. According to Cyble’s report, government institutions were in the top three for overall threat activity.

Cybercriminals also targeted users directly. Recently, more than 120,000 cameras in South Korea were hacked for so-called “sexploitation” footage.

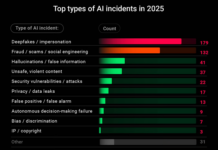

According to experts at Planet VPN, a free virtual private network (VPN) provider, a significant portion of this year’s attacks was amplified by AI tools. Konstantin Levinzon, co-founder and CEO of the company, says this trend will pose even bigger risks in 2026.

“Even though AI improves our daily lives and strengthens cybersecurity, it is also widely used by hackers. Now, even those without technical expertise can buy tools on the dark web that target thousands of users with a single click. The rise of AI-powered tools will amplify all kinds of attacks, including phishing scams, ransomware, and the exploitation of vulnerabilities, and these tools can even create attacks on their own,” Levinzon says.

Prediction 1: AI cybercriminals

Up until now, AI has been just a tool for cybercriminals, allowing them to organise and speed up attacks, he says. However, with rising agentic AI capabilities, AI will inevitably start attacking autonomously.

In a recent report, Anthropic described a hacking campaign in which the company’s Claude tools carried out around 80-90% of the operation on their own.

“AI tools will scan for weaknesses and exploit zero-day flaws – security gaps that are unknown to vendors – without a human touching a keyboard. As our homes, workplaces, and infrastructure are increasingly run by AI, any security gap becomes a potential attack vector. We will almost certainly see such autonomous attacks next year,” Levinzon says.

Prediction 2: Hyper-realistic deepfakes

Deepfakes – AI-generated fake videos, audio files, or images used to impersonate people – are becoming a headache for banks and other businesses, as they allow criminals to bypass online verification. Recently, an insurance company, sensing a lucrative opportunity, even started offering coverage for incidents where AI deepfakes cause reputational harm to companies.

Individual users are also at risk, Levinzon emphasizes. The FBI has recently warned users that criminals are generating fake images of kidnappings and using them for scams. According to Levinzon, the real rising threat is AI-generated fake video content.

“In 2025, video generators such as OpenAI’s Sora showed how easy it is to create highly realistic videos, and cybercriminals will use them to their advantage. As a result, banks and other financial institutions will likely take precautions to enhance their security measures to protect video verification processes. Regulations will likely follow quickly. For users, this may mean additional steps to confirm their identity,” he says.

Prediction 3: Digital body snatching

Millions of smartwatches, rings, AI wearables, and even new mattresses come equipped with large numbers of sensors that collect everything from your location to heart rate data and stress levels. As the number of these sensors increases, they become attractive targets for cybercriminals, experts say.

According to Levinzon, once hackers get access to a smartwatch or any other device, they can exfiltrate data easily, especially if the devices are poorly secured. Such data can also be gathered via cloud or app data leaks, Bluetooth exploits, and more.

“Potential wearable hacks, deepfakes, and autonomous AI systems mean that next year, users will need to take extra steps and security measures. Aside from staying vigilant, we also recommend enabling two-factor authentication, updating software regularly, and using a VPN, which adds an essential layer of defence against hackers,” Levinzon says.