AI incidents in 2025:

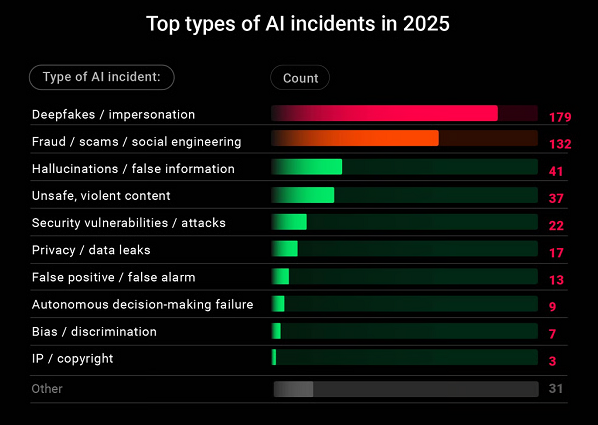

2025 was a big year for AI incidents. The AI Incident Database recorded 346 cases over the year. From deepfake scams to unsafe, violent content produced by popular chatbots, 2025 showed that as AI adoption rises, harmful AI incidents are becoming a growing problem.

Cybernews reviewed the AI Incident Database and labeled each case by type (such as deepfakes, fraud, and unsafe content). Where available, Cybernews also checked how often popular AI tools were named in the incidents (including ChatGPT, Grok, and Claude). The findings are meant to raise awareness of the dangers AI systems can pose and serve as a reminder to stay vigilant in 2026.

Deepfakes and fraud were the most common types of incidents

Out of the 346 AI incidents recorded in 2025, 179 involved deepfakes, including voice, video, or image impersonation. Targets ranged from politicians, CEOs, and other public figures to private individuals impersonated in investment and financial fraud schemes.

Deepfakes dominated AI-driven fraud. Of the 132 reported AI fraud cases, 81% (107 cases) were driven by deepfake technology. Many of these scams worked because they were highly targeted and realistic, leading victims to believe they were speaking with people they trusted, including loved ones. In one case, a Florida woman lost $15K after scammers used a deepfake of her daughter’s voice.
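For readers who want to check the proportions, the shares can be recomputed from the counts quoted above. The short Python sketch below is only an illustration: the `share` helper and the constant names are made up for this example and are not part of the Cybernews methodology. The categories overlap (a single case can be both a deepfake and a fraud incident), so the shares are not expected to add up to 100%.

```python
# Counts as quoted in the article for 2025 (AI Incident Database).
TOTAL_INCIDENTS = 346        # all recorded AI incidents
DEEPFAKE_INCIDENTS = 179     # incidents involving deepfakes
FRAUD_CASES = 132            # reported AI fraud cases
DEEPFAKE_FRAUD_CASES = 107   # fraud cases driven by deepfakes


def share(part: int, whole: int) -> float:
    """Return part/whole as a percentage, rounded to one decimal place.

    Hypothetical helper written for this illustration only.
    """
    if whole <= 0:
        raise ValueError("whole must be positive")
    return round(100 * part / whole, 1)


deepfake_share_of_fraud = share(DEEPFAKE_FRAUD_CASES, FRAUD_CASES)
deepfake_share_of_all = share(DEEPFAKE_INCIDENTS, TOTAL_INCIDENTS)

print(f"Deepfake share of fraud cases: {deepfake_share_of_fraud}%")
print(f"Deepfake share of all incidents: {deepfake_share_of_all}%")
```

The first figure rounds to the 81% quoted above, and the second shows that deepfakes were involved in roughly half of all incidents recorded for the year.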

Other incidents involved deepfakes of well-known figures. A Florida couple lost $45K after fraudsters posed as Elon Musk, promising a car giveaway and presenting fabricated “investment opportunities”. In another case, a British widow lost half a million British pounds in a romance scam where criminals impersonated actor Jason Momoa. These cases illustrate how convincingly deepfakes exploit trust across both personal and public contexts.

Violent, unsafe content – some AI incidents had deadly consequences

While less frequent, the most dangerous AI incidents proved to be those involving violent and unsafe content. In 2025, 37 such cases were reported, some of which resulted in loss of life. As more people turn to AI chatbots for advice and emotional support, there have been multiple cases in which these chatbots provided dangerous, life-threatening advice.

In some cases, AI chatbot interactions were linked to suicide. One widely reported case involved 16-year-old Adam Raine, who died by suicide after ChatGPT allegedly encouraged his suicidal thoughts instead of urging him to get support. However, OpenAI denies that ChatGPT had anything to do with the teen’s death.

Recent Cybernews research has shown that popular LLMs do, in fact, provide self-harm advice when prompted in particular ways, indicating that the current guardrails in popular chatbots are far from sufficient.

Beyond self-harm, some chatbots have also been shown to generate guidance related to violent crime. In one case, an IT professional tested a chatbot called Nomi and found that, when prompted, it could encourage users to commit murder and provide detailed instructions on how to carry out the act.

ChatGPT is the most frequently cited AI tool

Some incidents named specific AI tools. Among those that did, ChatGPT was cited most often, appearing in 35 cases. ChatGPT incidents ranged from a copyright violation case heard in a German court to an emerging mental health crisis linked to interactions with the chatbot.

Grok, Claude, and Gemini followed, with 11 cases each. Most cases did not name a specific AI tool, so the actual number of cases associated with these tools is likely much higher. The fact that the best-known tools appear in the data at all shows that popularity and reputation do not guarantee full safety or immunity from misuse. Even the most widely used AI tools rely on guardrails that can fail under certain conditions, particularly when users deliberately attempt to bypass safeguards. Cybernews research has confirmed this, revealing that popular LLMs can be tricked into providing dangerous content through carefully crafted prompts.

Bottom line

The AI incidents recorded in 2025 relied heavily on people trusting what they see and hear. Deepfakes make that trust easy to exploit, which is why deepfake fraud dominates the incident data. Cases involving unsafe and violent content, though less common, show that the consequences of AI incidents can go far beyond financial loss. The research also underlines the need for stronger safeguards in AI tools to prevent misuse in high-risk situations.

For more information on the research, visit here.