The first time I read Isaac Asimov’s I, Robot as a teenager, I was instantly fascinated by the humanoid robots in the stories. These machines could see, hear and interact with the surrounding world with human-like abilities. These astonishing capabilities fed my imagination. I even recall asking my dad if these machines could really “see.” Now, as an engineer, I understand the importance of machine vision and the power of “sight” for robotics.

Mobile-influenced applications have enabled the smart future we imagined: smarter homes that are voice-controlled, smarter sensors that make smarter cities a reality, smarter factories where automation solves many problems, and, of course, smarter self-driving cars. Machine vision, including cutting-edge volumetric capture techniques, is the enabling technology that’s fueling self-learning, decision-making, and autonomous systems.

Insight into a Machine Vision System

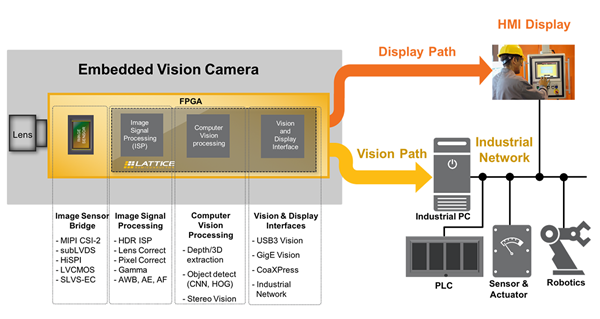

Machine vision has certain requirements, and these include both hardware and software. One of the main requirements is image acquisition, mostly using cameras. Modern mobile processors, for example, are equipped with camera interfaces such as MIPI CSI-2, and are used in many mobile-influenced applications. The camera sensors can simply grab an image and send the sensor data to the processing unit over standard or custom interfaces.

Machine vision is no longer just about capturing images and recognizing objects. Scientists and engineers are using this technology to make machines smarter. Examples include facial or object recognition and detection, distance or depth measurement using stereo vision, collision avoidance in modern cars, and using the electromagnetic spectrum to look for manufacturing defects on the factory floor. The processing unit in a machine vision system is essentially an advanced image processing and decision-making unit.

This unit can perform a variety of operations on the captured raw image data, such as image stitching and filtering, white balance adjustment, HDR adjustment, and object edge detection. The processing unit can then make decisions based on the processed image. These decisions could be autonomous (like activating a PLC, sensors, actuators or robots) or may require human intervention (like generating alerts on an HMI display).
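
To make the "process, then decide" idea concrete, here is a minimal sketch in plain Python. It assumes a small grayscale image stored as a list of rows, applies the standard 3x3 Sobel kernels for edge detection, and then maps the result to one of two outcomes. The function names, the thresholds, and the "continue" / "alert_operator" labels are hypothetical, chosen only for illustration. A real system would run this kind of filter on dedicated hardware or in an optimized library.

```python
from typing import List

Image = List[List[int]]  # grayscale image, row-major, values 0-255


def sobel_magnitude(img: Image) -> List[List[float]]:
    """Gradient magnitude from 3x3 Sobel kernels; border pixels stay 0."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # Horizontal gradient: right column minus left column.
            gx = (img[y - 1][x + 1] + 2 * img[y][x + 1] + img[y + 1][x + 1]
                  - img[y - 1][x - 1] - 2 * img[y][x - 1] - img[y + 1][x - 1])
            # Vertical gradient: bottom row minus top row.
            gy = (img[y + 1][x - 1] + 2 * img[y + 1][x] + img[y + 1][x + 1]
                  - img[y - 1][x - 1] - 2 * img[y - 1][x] - img[y - 1][x + 1])
            out[y][x] = (gx * gx + gy * gy) ** 0.5
    return out


def count_edge_pixels(img: Image, edge_threshold: float = 200.0) -> int:
    """Count pixels whose gradient magnitude exceeds the (assumed) threshold."""
    return sum(1 for row in sobel_magnitude(img) for v in row if v > edge_threshold)


def decide(img: Image, max_edge_pixels: int = 0) -> str:
    """Hypothetical decision rule: unexpected edges trigger an operator alert."""
    if count_edge_pixels(img) > max_edge_pixels:
        return "alert_operator"  # e.g. raise an alert on an HMI display
    return "continue"            # e.g. let the line keep running


if __name__ == "__main__":
    flat = [[100] * 5 for _ in range(5)]                # uniform surface
    step = [[0, 0, 255, 255, 255] for _ in range(5)]    # sharp vertical edge
    print(decide(flat), decide(step))
```

The decision rule here is deliberately crude: it only counts strong edges. In practice the decision stage would combine several processed features, but the structure stays the same: filter the raw pixels, reduce them to a few measurements, and act on those measurements.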

Machine Vision Examples in the Real World

In the case of a bottling plant, an autonomous robot has to make sure that each bottle is filled with the right amount of liquid. In the past, this was done by simply weighing each filled bottle. Now, cameras (machine vision) allow the robot to monitor the product line and provide visual feedback to the human supervisor. The robot is programmed to match a pattern to ensure each bottle is filled with the right amount of liquid. It can also use color matching, along with the transparency or opacity of the filled bottle, to make sure there aren’t any contaminants that could affect the quality of the end product.

The robot, in this case, is trained to perform simple pattern recognition: making sure the bottle is filled and matches the color of the liquid. The cameras on these robots are constantly taking pictures of the bottles, and the processing unit sets the parameters to match the required pattern. If any bottle does not meet the ‘pass’ criteria, it is marked to be removed from the assembly line by another robot. This is a very simplistic example, where the processing unit is given set parameters, i.e., making sure the amount and the color of the liquid are right.
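
The pass/fail check described above can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: each bottle is represented by a single vertical column of RGB pixels taken along its centerline (top to bottom), liquid is assumed to be darker than empty glass, and the target fill level, reference color and tolerances are made-up values. All names here are hypothetical.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]


def liquid_pixels(column: List[RGB], liquid_max_brightness: float = 150.0) -> List[RGB]:
    """Collect pixels from the bottom of the bottle up to the liquid surface.

    Assumes liquid is darker than the empty part of the bottle.
    """
    found: List[RGB] = []
    for r, g, b in reversed(column):
        if (r + g + b) / 3 > liquid_max_brightness:
            break  # reached empty glass above the liquid
        found.append((r, g, b))
    return found


def inspect_bottle(column: List[RGB],
                   target_fill: float = 0.8,
                   fill_tol: float = 0.05,
                   ref_color: RGB = (180, 40, 30),
                   color_tol: float = 20.0) -> bool:
    """Return True if the bottle meets the 'pass' criteria (fill level and color)."""
    liquid = liquid_pixels(column)
    if not liquid:
        return False  # nothing that looks like liquid
    # Check 1: fill level as a fraction of the bottle height.
    fill = len(liquid) / len(column)
    if abs(fill - target_fill) > fill_tol:
        return False
    # Check 2: average liquid color must be close to the reference color.
    n = len(liquid)
    avg = [sum(p[i] for p in liquid) / n for i in range(3)]
    return all(abs(a - ref) <= color_tol for a, ref in zip(avg, ref_color))


if __name__ == "__main__":
    EMPTY, LIQUID, MURKY = (240, 240, 240), (180, 40, 30), (90, 90, 40)
    good = [EMPTY] * 2 + [LIQUID] * 8
    underfilled = [EMPTY] * 5 + [LIQUID] * 5
    contaminated = [EMPTY] * 2 + [MURKY] * 8
    for name, col in [("good", good), ("underfilled", underfilled),
                      ("contaminated", contaminated)]:
        print(name, "pass" if inspect_bottle(col) else "reject")
```

A bottle that fails either check would be the one marked for removal from the line. Keeping the two checks separate makes it easy to report why a bottle was rejected, which is useful feedback for the human supervisor.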

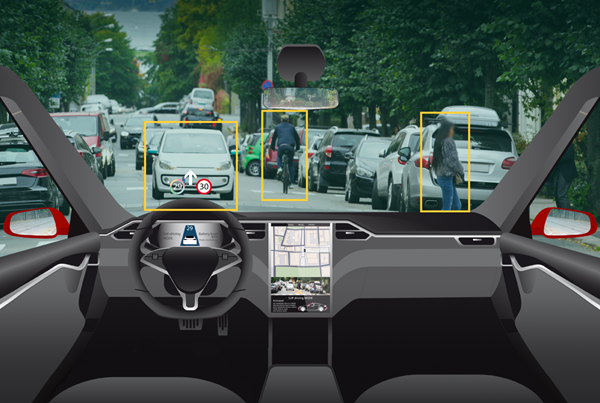

However, a machine vision system becomes far more complex when applied to safety-critical systems, like ADAS or autonomous driving. To make autonomous cars a reality, the cars have to be able to see, hear and feel like a human being. Having recently started teaching a new driver how to drive, I can say that these complex machines have to mimic human behavior. To do so, they need decision-making capabilities: observing the road, identifying hazards and making decisions as they drive. Machine vision is the key technology that allows our cars to see like human beings. When combined with machine learning, it is helping us advance towards autonomous driving.

Enabling Machine Vision

Machine vision has been able to solve many problems using hardware, software, or a combination of both. The applications of tomorrow can be addressed by combining dedicated, function-specific processing units with the parallel processing capabilities of FPGAs, which will enable the next level of intelligence in sensors at the Edge. Lattice’s Embedded Vision Development Kit brings together Lattice FPGAs that are optimized for low power and low cost in a smaller footprint, allowing the development of machine vision applications.

In Conclusion

Looking back 25 years, little did I know that technology was slowly making progress towards what Asimov imagined in the stories of I, Robot. It is exciting to see that some of it will happen in my lifetime. Machines of today can indeed “see,” and machine vision is making it all possible.

By: Jatinder (JP) Singh