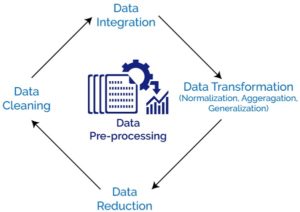

Data preprocessing is a set of techniques used to improve the quality of data before mining is applied, so that the data lead to high-quality mining results. Preprocessing techniques can substantially improve the overall quality of the patterns mined and/or reduce the time required for the actual mining. Data preprocessing includes data cleaning, data integration, data transformation, and data reduction.

Data cleaning: Data cleaning can be applied to remove noise and correct inconsistencies in the data.
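As a rough illustration of both ideas, the sketch below smooths noisy numeric values by replacing each one with the mean of its equal-frequency bin, and maps inconsistent spellings of a label onto one canonical form. The function names, the sample prices, and the alias table are all hypothetical and chosen only for the example; binning is just one of several possible smoothing methods.

```python
from statistics import mean

def smooth_by_bin_means(values, bin_size):
    """Sort the values, split them into equal-frequency bins,
    and replace every value with the mean of its bin."""
    ordered = sorted(values)
    smoothed = []
    for start in range(0, len(ordered), bin_size):
        bin_values = ordered[start:start + bin_size]
        smoothed.extend([mean(bin_values)] * len(bin_values))
    return smoothed

def canonicalize(label, aliases):
    """Trim and lowercase a label, then map known aliases to one spelling."""
    key = label.strip().lower()
    return aliases.get(key, key)

# Hypothetical noisy attribute: smoothing reduces random variation.
prices = [4, 8, 15, 21, 21, 24, 25, 28, 34]
smoothed_prices = smooth_by_bin_means(prices, bin_size=3)

# Hypothetical inconsistent attribute: the same country written four ways.
COUNTRY_ALIASES = {"usa": "us", "u.s.": "us", "united states": "us"}
countries = ["USA", " u.s. ", "United States", "US"]
clean_countries = [canonicalize(c, COUNTRY_ALIASES) for c in countries]
```

With bins of three values, the nine prices collapse to three distinct bin means, and all four country spellings become the same label.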

Data integration: Data integration merges data from multiple sources into a coherent data store, such as a data warehouse.
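A minimal sketch of integration, assuming two hypothetical sources that describe the same customers but name the key differently (`customer_id` in one, `cust` in the other). The records, field names, and the `integrate` helper are invented for illustration; a real warehouse load would also have to resolve conflicting values and units.

```python
# Hypothetical source 1: customer master data.
crm_records = [
    {"customer_id": 1, "name": "Ana"},
    {"customer_id": 2, "name": "Ben"},
]

# Hypothetical source 2: sales transactions, using a different key name.
sales_records = [
    {"cust": 1, "amount": 30.0},
    {"cust": 1, "amount": 12.5},
    {"cust": 3, "amount": 8.0},
]

def integrate(customers, sales):
    """Build one combined record per customer id from both sources."""
    store = {}
    for row in customers:
        store[row["customer_id"]] = {"name": row["name"], "total_sales": 0.0}
    for row in sales:
        # Map the sales key "cust" onto the shared customer id.
        # Customers seen only in the sales source get a placeholder name.
        entry = store.setdefault(row["cust"], {"name": None, "total_sales": 0.0})
        entry["total_sales"] += row["amount"]
    return store

warehouse = integrate(crm_records, sales_records)
```

Customer 3 appears only in the sales source, so the combined store keeps the record with a missing name rather than dropping it. That is a design choice, not a requirement.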

Data transformation: Data transformations, such as normalization, may be applied. For example, normalization may improve the accuracy and efficiency of mining algorithms involving distance measurements.
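To see why normalization matters for distance-based methods, the sketch below applies min-max normalization, one common choice among several, to two hypothetical attributes with very different ranges. The attribute names and values are assumptions made for the example.

```python
import math

def min_max_normalize(column):
    """Rescale a list of numbers linearly onto the range [0, 1]."""
    lo, hi = min(column), max(column)
    if hi == lo:
        # A constant column carries no spread; map every value to 0.0.
        return [0.0 for _ in column]
    return [(value - lo) / (hi - lo) for value in column]

def euclidean(p, q):
    """Euclidean distance between two points of equal dimension."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))

# Hypothetical attributes on very different scales.
ages = [20, 30, 40]
incomes = [20000, 60000, 100000]

# Before normalization, income dominates the distance almost entirely.
raw_distance = euclidean((ages[0], incomes[0]), (ages[1], incomes[1]))

norm_ages = min_max_normalize(ages)
norm_incomes = min_max_normalize(incomes)

# After normalization, both attributes contribute equally.
normalized_distance = euclidean(
    (norm_ages[0], norm_incomes[0]),
    (norm_ages[1], norm_incomes[1]),
)
```

In the raw data, the distance between the first two people is essentially the income difference alone. After rescaling, age and income each contribute the same amount.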

Data reduction: Data reduction can reduce the data size by, for instance, aggregating, eliminating redundant features, or clustering.
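Two of these reduction strategies can be sketched briefly: aggregating detailed rows into coarser totals, and dropping a feature that merely duplicates another. The sample data and helper names are hypothetical, and the duplicate check only catches exact copies, which is a deliberately simple stand-in for a proper redundancy analysis.

```python
from collections import defaultdict

def aggregate_by_year(rows):
    """Collapse quarterly rows into one sales total per year."""
    totals = defaultdict(int)
    for row in rows:
        totals[row["year"]] += row["sales"]
    return dict(totals)

def drop_duplicate_columns(table):
    """Keep the first of any columns whose values are identical.
    `table` maps a column name to its list of values."""
    kept = {}
    seen = set()
    for name, values in table.items():
        signature = tuple(values)
        if signature in seen:
            continue  # an exact copy of an earlier column adds no information
        seen.add(signature)
        kept[name] = values
    return kept

# Hypothetical quarterly sales, reduced to annual totals.
quarterly_sales = [
    {"year": 2022, "quarter": 1, "sales": 100},
    {"year": 2022, "quarter": 2, "sales": 150},
    {"year": 2022, "quarter": 3, "sales": 120},
    {"year": 2022, "quarter": 4, "sales": 130},
    {"year": 2023, "quarter": 1, "sales": 110},
    {"year": 2023, "quarter": 2, "sales": 160},
]
annual_sales = aggregate_by_year(quarterly_sales)

# Hypothetical feature table with one redundant column.
features = {
    "height_cm": [170, 180, 165],
    "height_copy": [170, 180, 165],
    "weight_kg": [65, 80, 58],
}
reduced_features = drop_duplicate_columns(features)
```

Six quarterly rows shrink to two annual totals, and the three-column feature table shrinks to two columns without losing information.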

These techniques are not mutually exclusive. They may work together.

Why is it needed?

Incomplete, noisy, and inconsistent data are commonplace properties of large real-world databases and data warehouses. Incomplete data can occur for a number of reasons. Attributes of interest may not always be available, such as customer information for sales transaction data. Other data may be left out simply because they were not considered important at the time of entry. Relevant data may not be recorded due to a misunderstanding or because of equipment malfunctions. Data that were inconsistent with other recorded data may have been deleted. Furthermore, the recording of the history of the data, or of modifications to it, may have been overlooked. Missing data, particularly in tuples with missing values for some attributes, may need to be inferred. Therefore, to improve the quality of the data and, consequently, of the mining results, data preprocessing is needed.
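One simple way to handle tuples with missing values, such as those described above, is to fill each gap with the mean of the values that are present for that attribute. The sketch below assumes missing entries are stored as `None`; the records and the `fill_missing_with_mean` helper are hypothetical, and mean imputation is only one of several possible strategies.

```python
from statistics import mean

def fill_missing_with_mean(rows, attribute):
    """Return copies of the rows in which a missing (None) value of
    `attribute` is replaced by the mean of the known values."""
    known = [row[attribute] for row in rows if row[attribute] is not None]
    if not known:
        # Nothing to infer from; return unchanged copies.
        return [dict(row) for row in rows]
    fill_value = mean(known)
    return [
        {**row, attribute: fill_value if row[attribute] is None else row[attribute]}
        for row in rows
    ]

# Hypothetical customer tuples; the second one has no recorded income.
customers = [
    {"id": 1, "income": 30000},
    {"id": 2, "income": None},
    {"id": 3, "income": 50000},
]
completed = fill_missing_with_mean(customers, "income")
```

The original list is left untouched, so the incomplete tuples remain available if a different strategy is tried later.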